pacman::p_load(emmeans,

ez, # get info on design of ANOVA

rstatix, # anova tables

cowplot,

tidyverse)39 Factorial ANOVA - The omnibus ANOVA and Main effects

Note that this week’s vignette assumes you have the following packages:

39.1 TLDR;

To run a factorial ANOVA we can use the aov() method we are familiar with. In this walkthrough we only focus on what can be done with the omnibus ANOVA assuming no interactions:

The general steps for running a factorial ANOVA:

- construct your ANOVA model using

lm - use the residuals of the model to test for normality and heteroscadicity

- test the model, checking for the presence of an interaction, and any main effects.

- If no interaction proceed with any necessary post hoc analyses on the main effects.

This week we will be focusing on Steps 1, 2, and 4, i.e., we won’t be formally looking at potential interaction effects. However, as we will see next week, analyzing and interpreting interaction effects is a critical part of factorial ANOVA.

For now, I want you focused on building intuitions about dealing with main effects.

39.1.1 Example

# Preliminaries

## load in data

dataset <- read_csv("http://tehrandav.is/courses/statistics/practice_datasets/factorial_ANOVA_dataset_no_interactions.csv")Rows: 36 Columns: 4

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (2): Lecture, Presentation

dbl (2): Score, PartID

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.# Step 1: create the additive ANOVA model

# note that this is not a full factorial model, but what we are focusing on this week:

aov_model <- aov(Score~Lecture + Presentation,data = dataset)

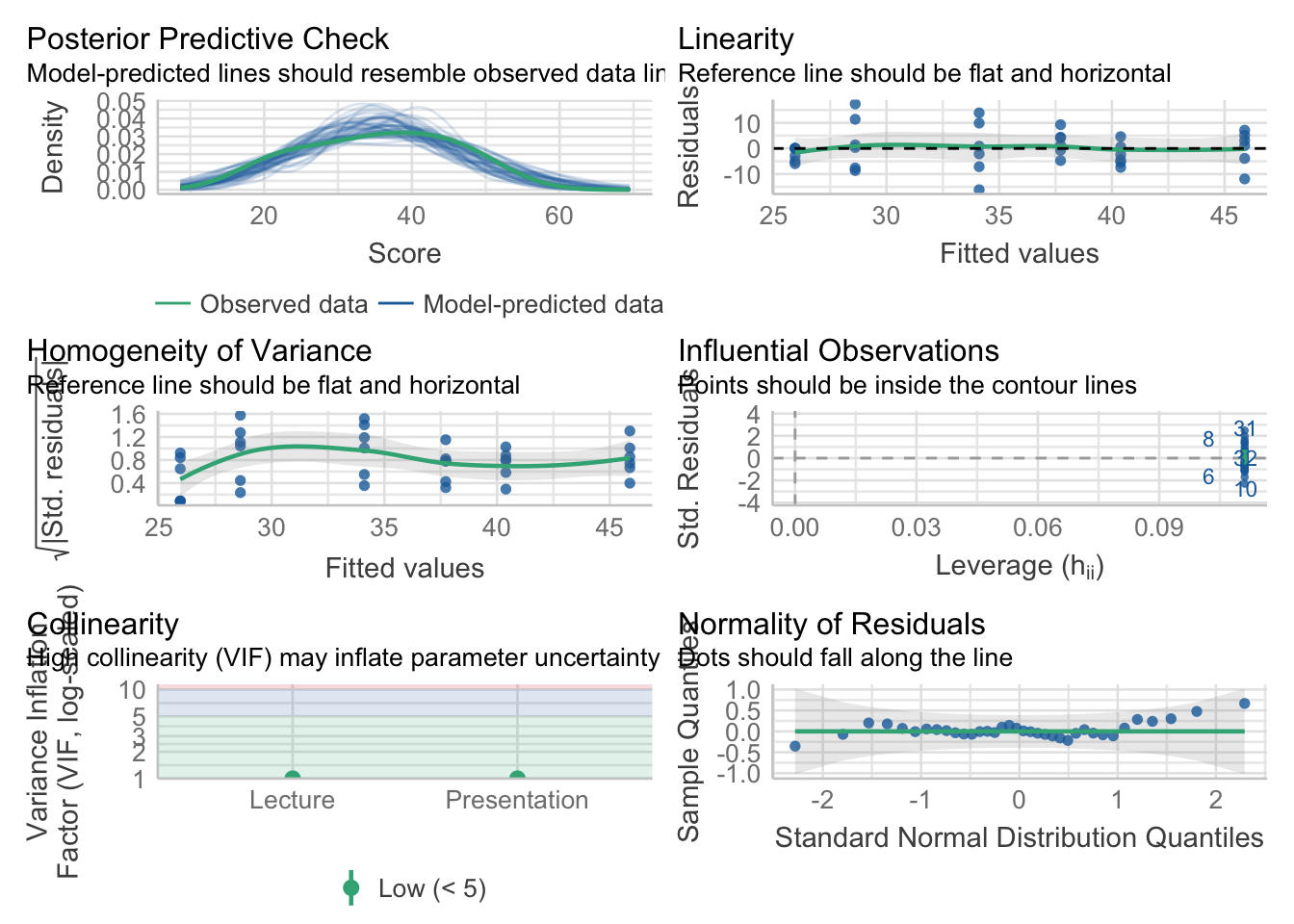

# Step 2a: test normalty / heteroscadicity using model visually, best to run from your console:

performance::check_model(aov_model)

# Step 2b: check normality and homogeneity assumptions using tests:

aov_model %>% performance::check_normality()OK: residuals appear as normally distributed (p = 0.887).aov_model %>% performance::check_homogeneity()Warning: Variances differ between groups (Bartlett Test, p = 0.040).# Step 3: check the F-ratio for significance

# note I'm only slecting columns from the anova table that are relevant to us:

aov_model %>% anova_test(effect.size = "ges")ANOVA Table (type II tests)

Effect DFn DFd F p p<.05 ges

1 Lecture 2 32 3.779 3.40e-02 * 0.191

2 Presentation 1 32 22.670 3.97e-05 * 0.415# Step 4: Post hoc analyses on main effects

emmeans(aov_model, specs = pairwise~Lecture, adjust="tukey")$emmeans

Lecture emmean SE df lower.CL upper.CL

Hist 34.5 2.14 32 30.1 38.9

Phys 40.0 2.14 32 35.6 44.4

Soc 31.8 2.14 32 27.5 36.2

Results are averaged over the levels of: Presentation

Confidence level used: 0.95

$contrasts

contrast estimate SE df t.ratio p.value

Hist - Phys -5.50 3.03 32 -1.815 0.1807

Hist - Soc 2.67 3.03 32 0.880 0.6565

Phys - Soc 8.17 3.03 32 2.696 0.0291

Results are averaged over the levels of: Presentation

P value adjustment: tukey method for comparing a family of 3 estimates emmeans(aov_model, specs = pairwise~Presentation, adjust="tukey")$emmeans

Presentation emmean SE df lower.CL upper.CL

Comp 41.3 1.75 32 37.8 44.9

Stand 29.6 1.75 32 26.0 33.1

Results are averaged over the levels of: Lecture

Confidence level used: 0.95

$contrasts

contrast estimate SE df t.ratio p.value

Comp - Stand 11.8 2.47 32 4.761 <.0001

Results are averaged over the levels of: Lecture 39.2 Analysis of Variance: Factorial ANOVA

In this week’s vignette we are simply building upon the previous two weeks coverage of One-way ANOVA and multiple comparisons. I’m assuming you’ve taken a look at all of the assigned material related to these topics. This week we up the ante by introducing more complex ANOVA models, aka factorial design. As we discussed in class, a factorial ANOVA design is required (well, for the purposes of this course) when your experimental design has more than one IV. Our examples this week focus on situations involving two IVs, however, what is said here applies for more complex designs involving 3, 4, 5, or however many IV’s you want to consider. Well, maybe not however many… as we we’ll see this week and the next, the more IVs you include in your analysis, the more difficult interpreting your results becomes. This is especially true if you have interaction effects running all over the place. But perhaps I’m getting a little bit ahead of myself. Let’s just way I wouldn’t recommend including more than 3 or 4 IVs in your ANOVA at a single time and for now leave it at that.

39.3 Main effect, main effect, and interactions… oh my!

When we are performing a factorial ANOVA we are performing a series of independent comparisons of means as a function of our IVs (this assumption of independence is one of the reasons that we don’t typically concern ourselves with adjusting our p-values in the omnibus factorial ANOVA). For any given number of IVs, or factors, we test for a main effect of that factor on the data—that is “do means grouped by levels within that factor differ from one another not taking into consideration the influence of any of the other IVs”. Our tests for interactions do consider the possibility that our factors influence one another—that is,”do the differences that are observed in one factor depend on the intersecting level of another?”

For the sake of simplicity, we will start with a 2 × 2 ANOVA and work our way up by extending the data set. Given our naming conventions, saying that we have a 2 × 2 ANOVA indicates that there are 2 IVs and each has 2 levels. A 2 × 3 ANOVA indicates that there are 2 IVs, and that the first IV has 2 levels and the second has 3 levels; a 2 × 3 × 4 ANOVA indicates that we have 3 IVs, the first has 2 levels, the second has 3 levels, and the third has 4 levels.

Our example ANOVA comes from a study testing the effects of smoking on performance in different types of putatively (perhaps I’m showing my theoretical biases here) information processing tasks.

There were 3 types of cognitive tasks:

1 = a pattern recognition task where participants had to locate a target on a screen;

2 = a cognitive task where participants had to read a passage and recall bits of information from that passage later;

3 = participants performed a driving simulation.

Additionally, 3 groups of smokers were recruited

1 = those that were actively smoking prior to and during the experiment;

2 = those that were smokers, but did not smoke 3 hours prior to the experiment;

3 = non-smokers.

As this is a between design, each participants only completed one of the cognitive tasks.

39.4 Example 1: a 2×2 ANOVA

Let’s grab the data from from the web. Note that for now we are going to ignore the covar column.

dataset <- read_delim("https://www.uvm.edu/~statdhtx/methods8/DataFiles/Sec13-5.dat", "\t", escape_double = FALSE, trim_ws = TRUE)Rows: 135 Columns: 4

── Column specification ────────────────────────────────────────────────────────

Delimiter: "\t"

dbl (4): Task, Smkgrp, score, covar

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.dataset# A tibble: 135 × 4

Task Smkgrp score covar

<dbl> <dbl> <dbl> <dbl>

1 1 1 9 107

2 1 1 8 133

3 1 1 12 123

4 1 1 10 94

5 1 1 7 83

6 1 1 10 86

7 1 1 9 112

8 1 1 11 117

9 1 1 8 130

10 1 1 10 111

# ℹ 125 more rowsLooking at this data, the first think that we need to do is recode the factors. Recall that we can do this using fct_recode…

39.4.1 Ninja-up

As an alternative to mutate(), you can add a new column to a data frame by invoking dataframe$new_column. This saves us a bit of typing. Also, for weeks I’ve told you not to overwrite existing columns, and I maintain that is best practice for beginners. However, in this example we will overwrite.

dataset$Task <- fct_recode(factor(dataset$Task), "Pattern Recognition" = "1", "Cognitive" = "2", "Driving Simulation" = "3")

dataset$Smkgrp <- fct_recode(factor(dataset$Smkgrp), "Nonsmoking" = "3", "Delayed" = "2", "Active" = "1")To get a quick view of our data structure we can use two kinds of calls:

summary() provides us with info related to each column in the data frame. If a column contains a factor it provides frequency counts of each level. It the column is numeric it provides summary stats:

summary(dataset) Task Smkgrp score covar

Pattern Recognition:45 Active :45 Min. : 0.00 Min. : 64.0

Cognitive :45 Delayed :45 1st Qu.: 6.00 1st Qu.: 99.0

Driving Simulation :45 Nonsmoking:45 Median :11.00 Median :111.0

Mean :18.26 Mean :112.5

3rd Qu.:26.00 3rd Qu.:128.0

Max. :75.00 Max. :191.0 In addition, I like to use the ezDesign() function from the ez package to get a feel for counts in each cell. This is useful for identifying conditions that may have missing data.

ez::ezDesign(data = dataset,

x=Task, # what do you want along the x-axis

y=Smkgrp, # what do you want along the y-axis

row = NULL, # are we doing any sub-divisions by row...

col = NULL) # or column

This provides us with a graphic representation of cell counts. In this case, every condition (cell) has 15 participants. As you can see right now this is a 3 x 3 ANOVA.

To start, let’s imagine that we are only comparing the active smokers to the nonsmokers, and that we are only concerned with the pattern recognition v driving simulation. In this circumstance we are running a 2 (smoking group: active v. passive) × 2 (task: pattern recognition v. driving simulation) ANOVA. We can do a quick subsetting of this data using the filter() command. For our sake, let’s create a new object with this data, dataset_2by2:

# subsetting the data. Remember that "!=" means "does not equal"; "&" suggests that both cases must be met, so

dataset_2by2 <- filter(dataset, Smkgrp!="Delayed" & Task!="Cognitive")To get a quick impression of what this dataset looks like, we can use the summary() function, or ezDesign():

# getting a summary of dataset_2by2:

summary(dataset_2by2) Task Smkgrp score covar

Pattern Recognition:30 Active :30 Min. : 0.00 Min. : 64.00

Cognitive : 0 Delayed : 0 1st Qu.: 2.75 1st Qu.: 98.75

Driving Simulation :30 Nonsmoking:30 Median : 8.00 Median :111.00

Mean : 7.90 Mean :110.73

3rd Qu.:11.00 3rd Qu.:123.00

Max. :22.00 Max. :168.00 ez::ezDesign(data = dataset_2by2,

x=Task, # what do you want along the x-axis

y=Smkgrp, # what do you want along the y-axis

row = NULL, # are we doing any sub-divisions by row...

col = NULL) # or column

You may notice from the summary above that the groups that were dropped “Delayed” smokers and “Cognitive” task still show up in the summary, albeit now with 0 instances (you’ll also notice that the remaining groups decreased in number, can you figure out why?). In most cases, R notices this an will automatically drop these factors in our subsequent analyses. However, if needed (i.e. it’s causing errors), these factors can be dropped by invoking the droplevels()function like so:

dataset_2by2 <- dataset_2by2 %>% droplevels()

summary(dataset_2by2) Task Smkgrp score covar

Pattern Recognition:30 Active :30 Min. : 0.00 Min. : 64.00

Driving Simulation :30 Nonsmoking:30 1st Qu.: 2.75 1st Qu.: 98.75

Median : 8.00 Median :111.00

Mean : 7.90 Mean :110.73

3rd Qu.:11.00 3rd Qu.:123.00

Max. :22.00 Max. :168.00 And to see the cell counts:

ez::ezDesign(data = dataset_2by2, x=Task,y=Smkgrp,row = NULL,col = NULL)

39.5 Making sense of plots

Let’s go ahead and plot the data using a line plot with 95% CI error bars. Note that these plots (up until the last section) are not APA-complete!!!!

39.5.1 Interaction plots

Interaction plots take into consideration the influence of each of the IVs on one another—in this case the mean and CI of each smoking group (Active v. Nonsmoking) as a function of Task (Driving Simulation v. Pattern Recognition). There is an additional consideration when plotting multiple predictors. That said, all we are doing is extending the plotting methods that you have been using for the past few weeks. The important addition here is the addition of group= in the first line the ggplot. For example:

ggplot2::ggplot(data = dataset_2by2, mapping=aes(x=Smkgrp,y=score,group=Task))indicates that we are:

using the

dataset_2by2data setputting first IV,

Smkgrp, on the x-axisputting our dv,

scoreon the y-axisand grouping our data by our other IV,

Task

This last bit is important as it makes clear that the resulting mean plots should be of the cell means related to Smkgrp x Task

For example, a line plot might look like this. Note that I am assigning a different shape and linetype to each level of Task:

# line plot

ggplot(data = dataset_2by2, mapping=aes(x=Smkgrp,

y=score,

group=Task,

shape = Task)) +

stat_summary(geom="pointrange",

fun.data = "mean_se") +

stat_summary(geom = "line",

fun = "mean",

aes(linetype = Task)) +

theme_cowplot()

One thing you may have noticed is that is very difficult to distinguish between my Task groups at the Non-smoking level, the points and error bars are overlapping one another. One way to fix this is to use the position_dodge() function. This effectively shifts the data points to the left and right to separate them from one another. You need to perform an identical position_dodge() for each element you intend to shift. In this case, we need to include it in both the pointrange and line parts of our both.

Rewriting the above (while also getting rid of color per APA format)

# line plot with dodging

ggplot(data = dataset_2by2,

mapping=aes(x=Smkgrp,

y=score,

group=Task,

shape = Task)

) +

stat_summary(geom="pointrange",

fun.data = "mean_se",

position = position_dodge(0.25),

) +

stat_summary(geom = "line",

fun = "mean",

aes(linetype = Task),

position = position_dodge(0.25)

) +

theme_cowplot()

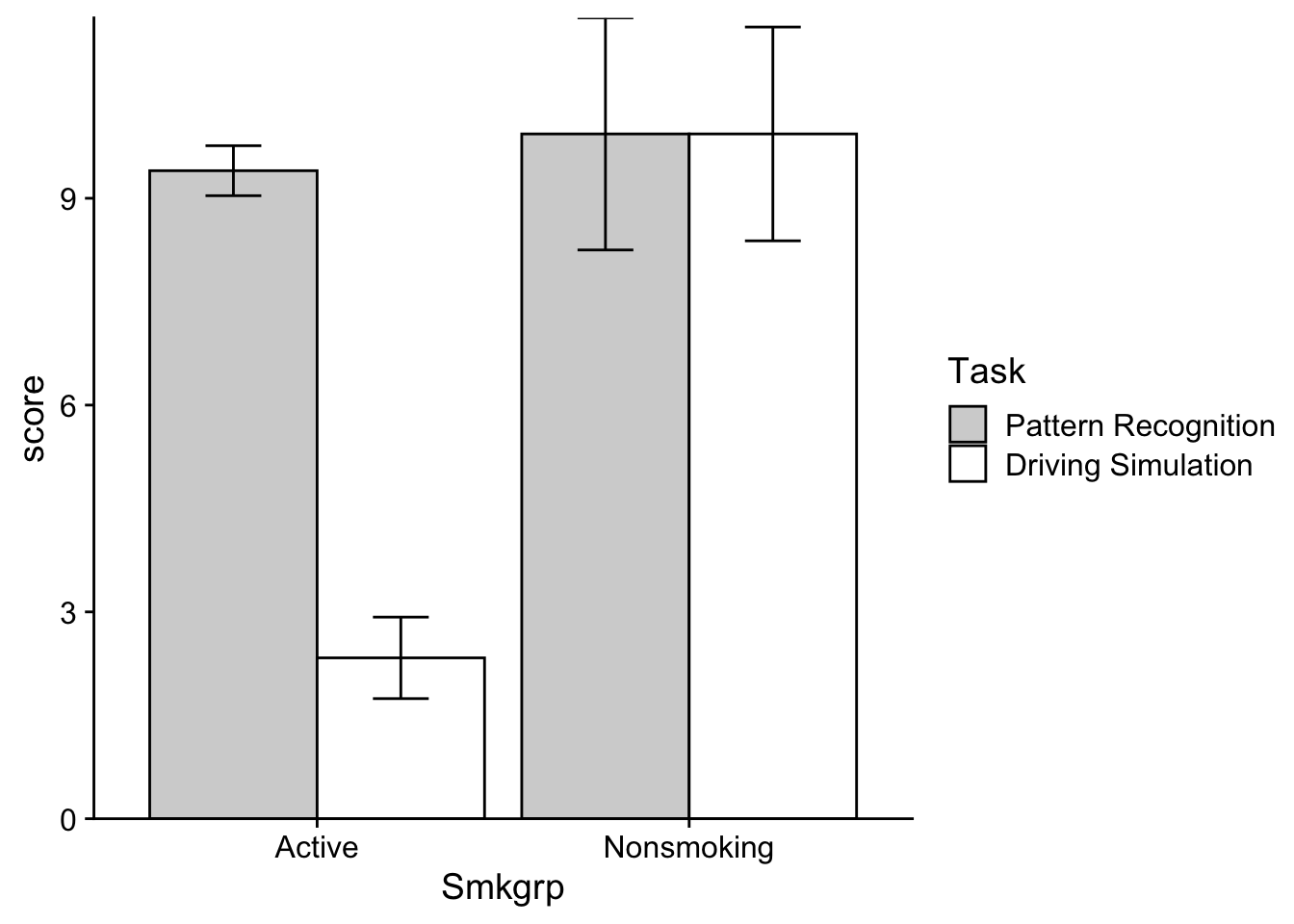

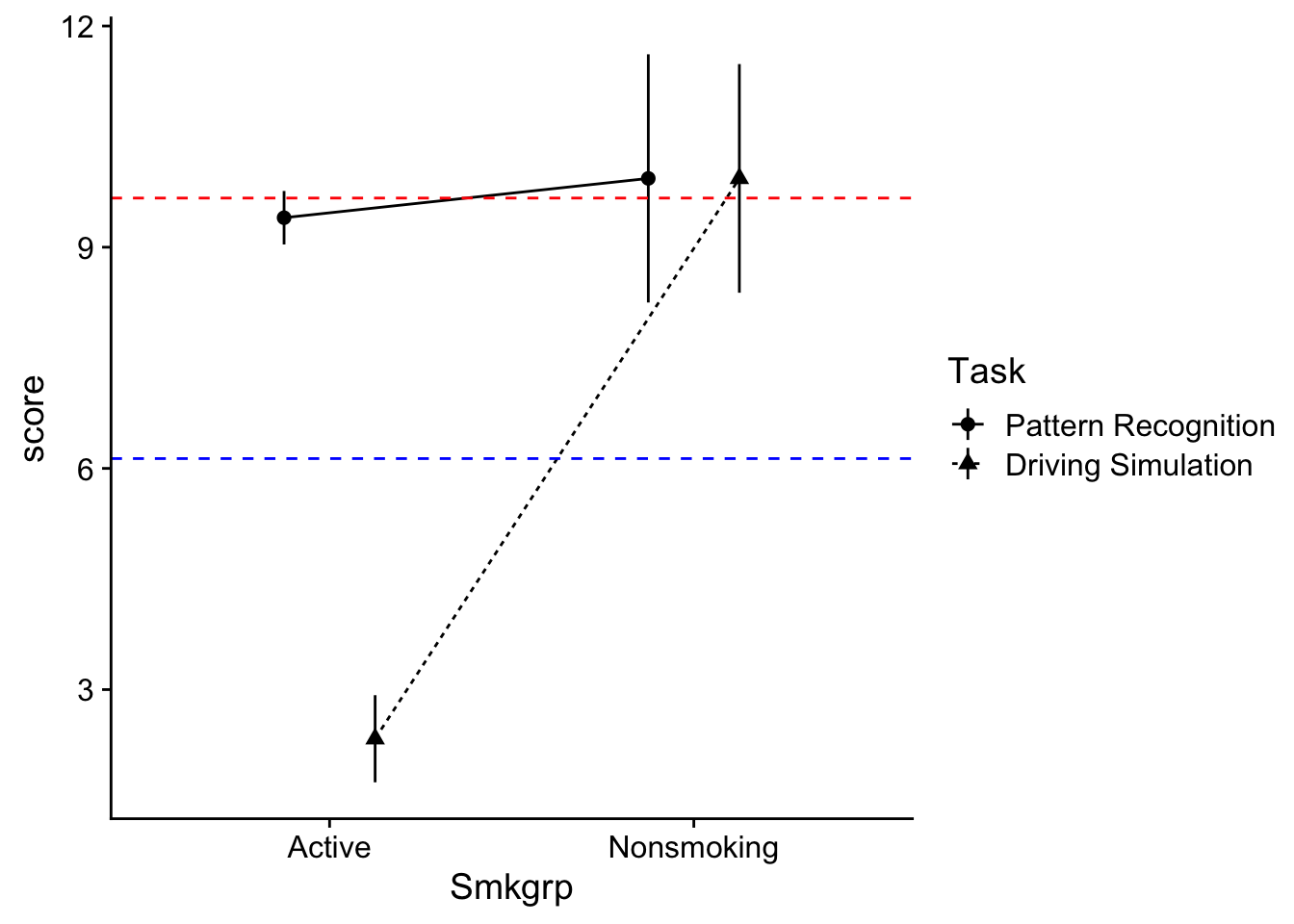

A brief inspection of the plot can be quite informative. Let’s start with the interaction, in fact: you should always start with the interaction. Since this is an “interaction” plot, often a quick visual inspection will allow us to predict whether our subsequent ANOVA will likely yield an interaction effect (it’s good practice to plot your data before running your ANOVA). A simple rule of thumb is that if you see the lines converging or intersecting then more than likely an interaction is present. However, whether it’s statistically significant is another question. You might think that this rule of thumb is useful if you use a line plot, and well, you’d be right. What about a bar plot, you ask? * Note that when generating a grouped barplot, you may need to position dodge to ensure your bars don’t overlap. Typically selecting a value of 0.9 ensures they do not overlap, but also that your grouped bars are touching one another with no gap between. For practice try changing the position_dodge values in the plot below to see how that effects the plot.

# barplot

ggplot(data = dataset_2by2, mapping=aes(x=Smkgrp,y=score,group=Task)) +

stat_summary(geom = "bar",

fun = "mean",

color="black",

aes(fill=Task),

position=position_dodge(.9)) +

stat_summary(geom="errorbar",

width=.3,

fun.data = "mean_se",

position = position_dodge(.9)) +

theme(legend.position="none") +

scale_fill_manual(values = c("light grey", "white")) +

theme_cowplot() +

# fix annoying gap at bottom of barplot

scale_y_continuous(expand = c(0,0))

Note that the grey fills above are the “Driving Simulation” group and the white are the “Pattern Recognition”. Take a look at the means. If the relative difference between grouped means changes as you move from one category on the x-axis to the next, you likely have an interaction. Note that this is a general rule of thumb and applies to the line plots as well (the reason that the lines intersect is because of these sorts of changes). In this case, the bar (cell) means on the “Active” grouping are nearly identical, while the bar means in the “Nonsmoking” grouping are much further apart. So we likely have interaction.

39.5.2 Plotting main effects

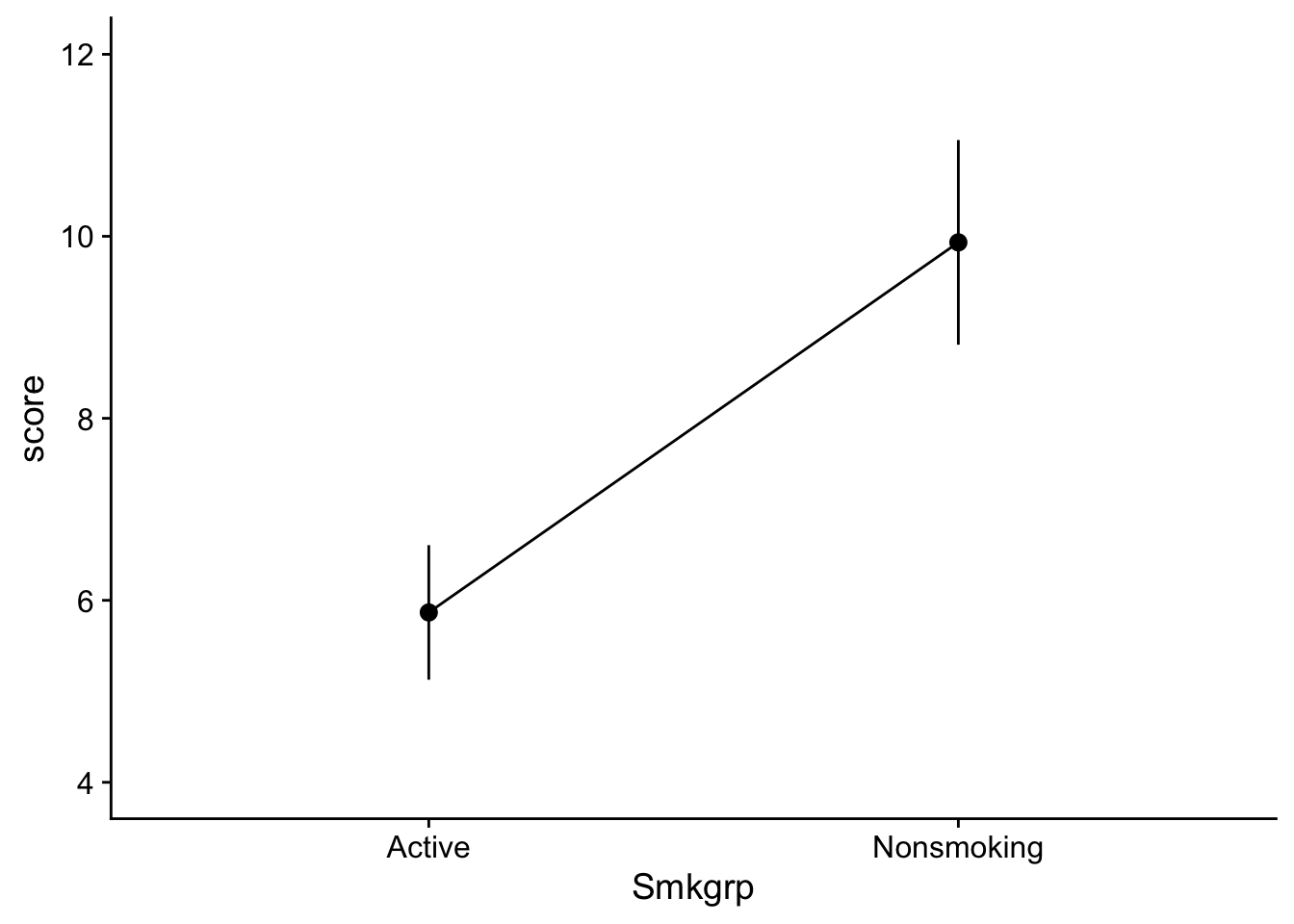

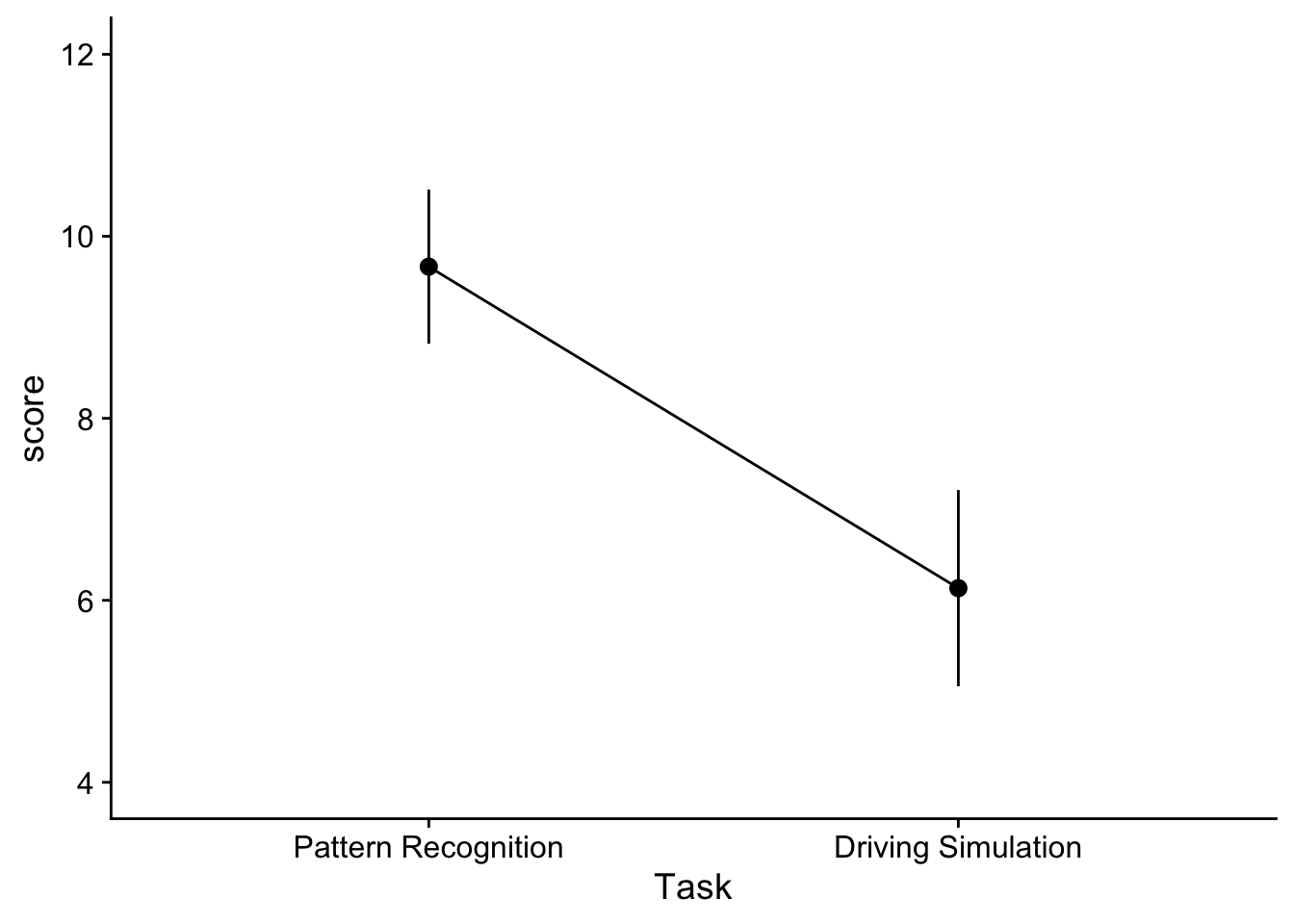

If we wanted we could also create separate plots related to our mean effects. Remember that a main effect takes a look at the difference in means for one IV independent of the other IV(s). For example, consider Smkgrp, we are looking at the means of Nonsmoking and Active indifferent to Task. These plots would look something like this:

Smoke_main_effect_plot <- ggplot2::ggplot(data = dataset_2by2, mapping=aes(x=Smkgrp,y=score, group=1)) +

stat_summary(geom="pointrange",

fun.data = "mean_se",

position=position_dodge(0)) +

stat_summary(geom = "line",

fun = "mean",

position=position_dodge(0)) +

coord_cartesian(ylim=c(4,12)) +

theme_cowplot()

show(Smoke_main_effect_plot)

Task_main_effect_plot <- ggplot2::ggplot(data = dataset_2by2, mapping=aes(x=Task,y=score, group=1)) +

stat_summary(geom="pointrange",

fun.data = "mean_se",

position=position_dodge(0)) +

stat_summary(geom = "line",

fun = "mean",

position=position_dodge(0)) +

coord_cartesian(ylim=c(4,12)) +

theme_cowplot()

show(Task_main_effect_plot)

and would take a look at changes due to each IV without considering the other. Here we might infer that there is a main effect for both of our IVs. Note that when constructing a line plot with only one IV, you still need to specify group = 1 in your aes() function. Otherwise the you may get an error about line grouping.

That said, the original interaction plot is useful as well in assessing main effects as well. In this case, to infer whether there might be main effects we can imagine where the means would be if we collapsed our grouped plots (this is exactly what the main effects take a look at). To help with your imagination I’m going to plot our main effect means on the interaction plot. Here the grey-filled triangles represent the the collapsed Smoking Group means indifferent to task. To get these, just imagine finding the midpoint of the two circles in each level of Smoking group. The slope suggests the possibility a main effect.

Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0.

ℹ Please use `linewidth` instead.

To imagine the collapsed Task means, we can just find the y-values that intersect with the midpoints of each line (note the red line is the mean value of the Driving Simulation group):

The difference in y-intercepts suggests the possibility of a main effect.

So in summary, I’m guessing from plot I’ve got 2 main effects and an interaction. For this week, we are only going to focus on the main effects. In the future, you’ll see that we would need to test for the interaction as well, and if it was present we would need to “deal” with that first.

For now, let’s focus on testing the main effects.

39.6 Running the ANOVA aov() method

Now we can run the using the aov() method as we have previously done with the One-way ANOVA. The new wrinkle is simply adding our additional IV terms to the the formula equation:

\[ y=IV_1+IV_2+...+IV_n \]

where the first and second terms capture our main effects and the third is our interaction.

Using our data in R this formula becomes:

aov_model <- aov(score~Smkgrp+Task,data = dataset_2by2)39.7 Assumption testing

39.7.1 visual inspection

Let’s quickly assess whether the prescribed model satisfies the assumptions for general linear models. Visually we may call upon the performance::check_model(). to get a series of visual inspection checks in a single plot. Note that you encounter errors in your .qmd, you may try to run this directly from your Console

aov_model %>% performance::check_model()

Alternatively, running the above with panel = FALSE allows you to see each plot individually.

aov_model %>% performance::check_model(panel = FALSE)For our purposes this semester, the Homogeneity of Variance and Normality of Residuals plots are most important. Visual inspection suggests that while normality may not be and issue, there is potentially something awry with the homogeneity of variance.

39.7.2 test for assessing normality

If we want to check the normality of residuals we may use the Shapiro-Wilkes Test. We can call it directly using from the performance library:

aov_model %>% performance::check_normality()OK: residuals appear as normally distributed (p = 0.887).Keep in mind that the Shapiro-Wilkes test for normality is notoriously conservative. In week’s past we discussed an alternative based on this method from Kim (2013) “Statistical notes for clinical researchers: assessing normal distribution using skewness and kurtosis”:

# recommendation from Kim (2013)

source("http://tehrandav.is/courses/statistics/custom_functions/skew_kurtosis_z.R")

aov_model$residuals %>% skew_kurtosis_z() upr.ci crit_vals

skew_z 0.72 1.96

kurt_z -0.02 1.96Based on what you see what conclusions might you make?

39.7.3 test for assessing homogeneity of variance

Typically, we can submit our aov_model to car::leveneTest to test for homogeneity. However, for this week only, this will produce an error. This is because the car::leveneTest() demands that the IVs in a factorial ANOVA be crossed. That is, the Levene’s test requires that we include the interaction term in our model. This week, we are NOT looking at interactions, nor am I asking you to include the terms in our model. Because of this, we will us an alternate method, the Bartlet test while noting that in future weeks, and under most practical circumstances this will not be an issue. FWIW, the performance method works as a reliable alternative to car::leveneTest in most circumstances, so you could elect to just use this instead using method = "levene".

aov_model %>% performance::check_homogeneity(method = "bartlet")Warning: Variances differ between groups (Bartlett Test, p = 0.040).Our test says we have a violation (which is consistent with our visual inspection above. Let’s take a look at the 3x time rule. To do this we need to get a feel for the variance within each cell. While we are at it we might as well get the means too. Which leads to the next section (don’t worry we’ll come back to this)

39.8 Getting cell means and sd

To get cell means and standard deviations we can use the rstatix package. This package has a function called get_summary_stats() that will provide us with the means and sd for each cell. To use this function we need to group_by() our data by our IVs. For example, to get the means and sd for each cell we would do the following:

dataset_2by2 %>%

group_by(Task, Smkgrp) %>%

rstatix::get_summary_stats(score)# A tibble: 4 × 15

Task Smkgrp variable n min max median q1 q3 iqr mad mean

<fct> <fct> <fct> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Patter… Active score 15 7 12 10 8 10 2 1.48 9.4

2 Patter… Nonsm… score 15 1 22 9 7.5 14 6.5 4.45 9.93

3 Drivin… Active score 15 0 6 2 0 3.5 3.5 2.96 2.33

4 Drivin… Nonsm… score 15 0 17 13 4 15 11 5.93 9.93

# ℹ 3 more variables: sd <dbl>, se <dbl>, ci <dbl>Recalling that we had an issue with heteroscadicity, we can now use the obtained sd values to assess whether our violation is too great. Let’s save our summary table:

cell_summary_table <-

dataset_2by2 %>%

group_by(Task, Smkgrp) %>%

rstatix::get_summary_stats(score)from this we can call the sd values and square them to get our variance:

# varience is sd-squared:

cell_summary_table$sd^2[1] 1.971216 42.497361 5.239521 36.072036Houston we have a problem! The largest variance there is definitely greater than 3x the smallest. What to do? For now nothing, I don’t want you to go down that rabbit hole just yet. But look for info on dealing with this later.

39.9 getting marginal means for main effects

We can also use this function to report the means related to the main effects (irrespective of interaction).

For example, Smoking effect I can re-write the call above, simply dropping Task from the `group_by():

dataset_2by2 %>%

group_by(Smkgrp) %>%

rstatix::get_summary_stats(score)# A tibble: 2 × 14

Smkgrp variable n min max median q1 q3 iqr mad mean sd

<fct> <fct> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Active score 30 0 12 6.5 2 9.75 7.75 5.19 5.87 4.05

2 Nonsmok… score 30 0 22 9 5.5 15 9.5 8.90 9.93 6.16

# ℹ 2 more variables: se <dbl>, ci <dbl>and for the Task effect:

dataset_2by2 %>%

group_by(Task) %>%

rstatix::get_summary_stats(score)# A tibble: 2 × 14

Task variable n min max median q1 q3 iqr mad mean sd

<fct> <fct> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Pattern… score 30 1 22 9 8 10.8 2.75 1.48 9.67 4.64

2 Driving… score 30 0 17 3.5 2 12 10 5.19 6.13 5.91

# ℹ 2 more variables: se <dbl>, ci <dbl>Note that If you are reporting means related to the main effects, you need to report these marginal means!

HOWEVER… this data has an interaction, and remember what I said above, if the data has an interaction, you need to deal with that first. We’ll show how to work with data that has interactions next week.

39.10 Testing the ANOVA

Ok. Now let’s look at our ANOVA table. Note that instead of the full print out, I’m asking sjstats to only give me the values I’m interested in: the term (effect), df, (F) statistic, p-value, and effect size, in this case partial.eta

aov_model %>% anova_test(effect.size = "ges")ANOVA Table (type II tests)

Effect DFn DFd F p p<.05 ges

1 Lecture 2 32 3.779 3.40e-02 * 0.191

2 Presentation 1 32 22.670 3.97e-05 * 0.415You’ll note that both main effects are significant, meaning that we would need to perform pairwise, post-hoc comparisions for each effect. BUT you’ll also note that each of our factors ONLY HAD TWO LEVELS, meaning that the F-test in the ANOVA table IS a pairwise comparison. In cases like this, when the effect only has 2 levels, we are done (any post hoc analysis would be redundant).

Assuming you feel comfortable with everything in this walkthrough, let’s proceed to the next, which includes cases where our factors have three (or more) levels.