pacman::p_load(tidyverse,

cowplot,

glue) # for gluing strings of text together32 Power, Effect sizes, and Significance testing

Both power and effect size are defined as related to estimates of \(\mu\) & \(\sigma\)—means and standard deviation units. However, as we saw two weeks ago with our t-test, significance is evaluated in terms of standard error units. As we’ve often noted and reiterated above standard error is influenced by the size of your sample. In comparison, for any given sample the mean and standard deviation that you observe are entirely by chance. Simply put, the values that we use to estimate effect size are independent of the values that we use to test for significance. This is why even though an effect might be statistically significant, it may not be meaningfully / clinically significant. We may have over-amplified a relatively weak original signal by over-powering our study (e.g., getting way more participants than necessary).

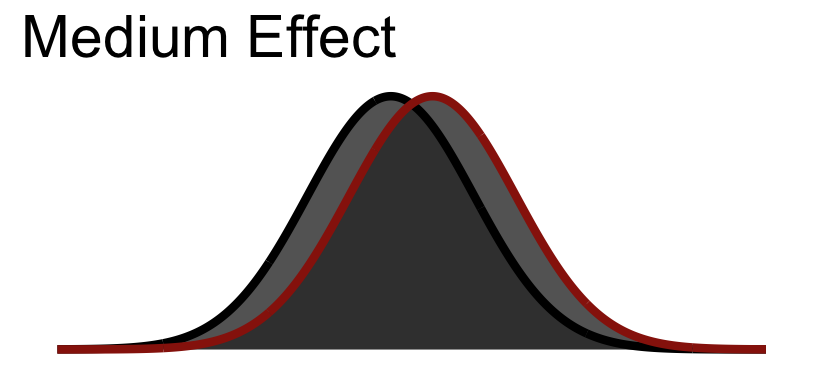

Let’s look at an example assuming a medium effect size. Imagine this is whats going on at the level of the population.

Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0.

ℹ Please use `linewidth` instead.

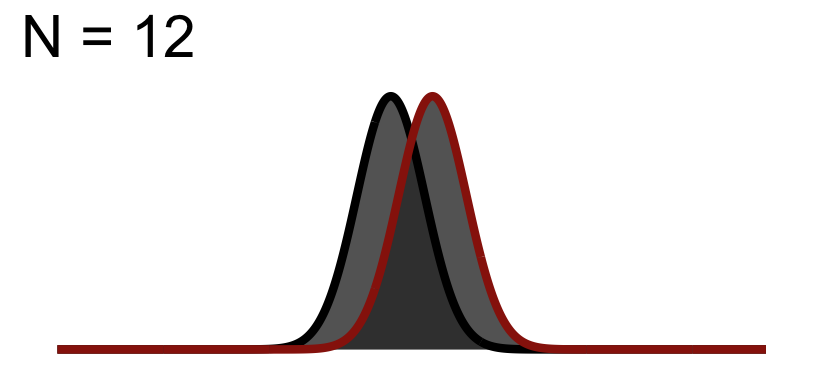

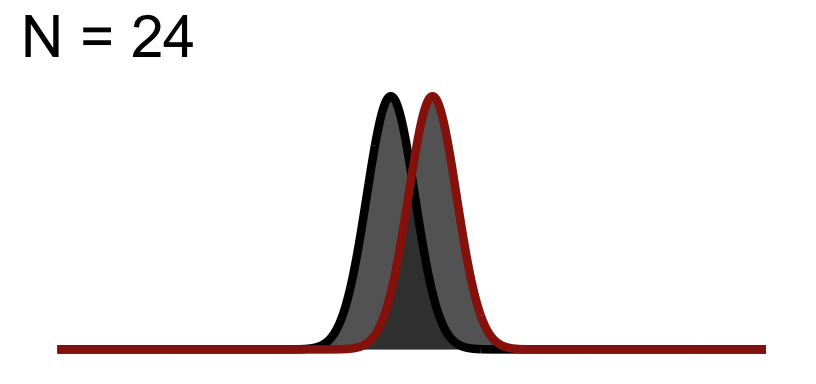

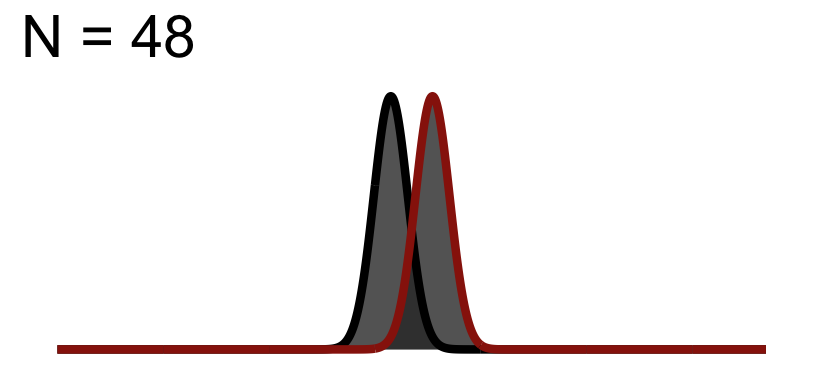

Now imagine going out an collecting data from this population given samples of different sizes. Note that the width of the curves here capture standard error.

What do you notice about the figures below?

32.1 Significance Test when N = 12

32.2 Significance Test when N = 24

32.3 Significance Test when N = 48

You will notice the area of \(1-\beta\) increases because as we add to the sample size, the curve (noise) gets thinner (SEM), but the distance between them does not change! This effect size is theoretically independent of significance testing as its simply based on the mean difference / standard deviation. If you know the true standard deviation (\(\sigma\)) then this is a true statement. However, we never know the true standard deviation so we approximate it based on sample. So our observed estimate of effect size from experimental data is another guess based on the variation due to chance. Because of this we cannot tie effect size to p-value.

To demonstrate this, let’s revisit the Hand et al data this time reducing the size of the effect. Again regarding Hand:

Hand, et al., 1994, reported on family therapy as a treatment for anorexia. There were 17 girls in this experiment, and they were weighed before and after treatment. The weights of the girls, in pounds, is provided in the data below:

anorexia_data <- read_delim("https://www.uvm.edu/~statdhtx/methods8/DataFiles/Tab7-3.dat",

"\t", escape_double = FALSE, trim_ws = TRUE)Rows: 17 Columns: 3

── Column specification ────────────────────────────────────────────────────────

Delimiter: "\t"

chr (1): ID

dbl (2): Before, After

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.# reducing the effect (minimizing difference)

anorexia_data <- anorexia_data %>%

mutate(After = After - 6)

# putting in long format

anorexia_data <- pivot_longer(data = anorexia_data,cols = c(Before,After),names_to = "Treatment",values_to = "Weight")So what is known: we have 17 total participants from (hypothetically) the same population that are measured twice (once Before treatment, and once After treatment). Based upon the experimental question we need to run a paired-sample (matched-sample) test.

t.test(Weight~Treatment, data=anorexia_data, paired=T)

Paired t-test

data: Weight by Treatment

t = 0.72773, df = 16, p-value = 0.4773

alternative hypothesis: true mean difference is not equal to 0

95 percent confidence interval:

-2.419429 4.948840

sample estimates:

mean difference

1.264706 pacman::p_load(effectsize)

effectsize::cohens_d(Weight~Treatment, data=anorexia_data, correction = T)Cohen's d | 95% CI

-------------------------

0.18 | [-0.49, 0.85]

- Estimated using pooled SD.So on first pass we’ve got a null effect (\(p>.05\)) and our effect size is small (0.16). Let’s image that instead of 17 participants we had 170:

anorexia_data_170 <- do.call("rbind", replicate(10, anorexia_data, simplify = FALSE))t.test(Weight~Treatment, data=anorexia_data_170, paired=T)

Paired t-test

data: Weight by Treatment

t = 2.3651, df = 169, p-value = 0.01916

alternative hypothesis: true mean difference is not equal to 0

95 percent confidence interval:

0.2090925 2.3203193

sample estimates:

mean difference

1.264706 pacman::p_load(effectsize)

effectsize::cohens_d(Weight~Treatment, data=anorexia_data, correction = TRUE) Cohen's d | 95% CI

-------------------------

0.18 | [-0.49, 0.85]

- Estimated using pooled SD.We see our effect size remains unchanged, but now our test is significant!