pacman::p_load(tidyverse, car, SimDesign, regclass)19 The normality assumption

In the previous walkthough we talked about the Independence Assumption and talked about how the entire enterprise of NHST is build upon this assumption. The nasty thing about the Independence Assumption is that its baked in… there’s really no was to test for true independence or our predictors (nor are we ever likely for this to be completely true). Sure, we can test the degree to which a given predictor might correlate with other predictors, but this leaves out the possibility of non-obvious, non-measured influences to our observations. For now, we just happily restrict ourselves to this limited definition of independence and move happily along.

In this walkthrough we’ll discuss the normality assumption. First, let’s load in the following packages:

19.1 Normality Assumption

In class we discussed that one of the first and important steps when taking a look at your data is to assess whether the data conform to the assumptions of your analysis of choice. For most parametric tests (e.g., regression, t-tests, ANOVA families), one of the key assumptions is that scores of your dependent variable(s) approximate some known distribution. The distribution that gets the most play in this course is the normal distribution.

In the interest of having a unified resource, I’m putting together a brief vignette highlighting methods for assessing whether your data is normal or not. I stress the word “assess” and not “test” for reasons that will be made apparent below. My best advice is to take several tools to make the decision of whether your data is normal or not.

In brief there are three “tools” for assessment:

- plotting your data using histograms and QQ-plots

- evaluating measures of skew and kurtosis

- significance tests for normality

It may be temping to believe that #3, significance testing, would be a magic bullet. However, we need to be cautious with tests like Kolmorogorov and Shapiro-Wilks. In my own practice, I typically use them last in the case of extreme ambiguity, but more often to confirm what I already believe (journals like “tests to confirm”).

Before reaching your conclusion you should employ multiple methods!!

19.2 Why normality?

Before jumping in let’s take a look at a brief example highlighting why the normality assumption is critical for the models that we will be discussing in the upcoming weeks. To build this intuition let’s return to our population of TJD-HS students.

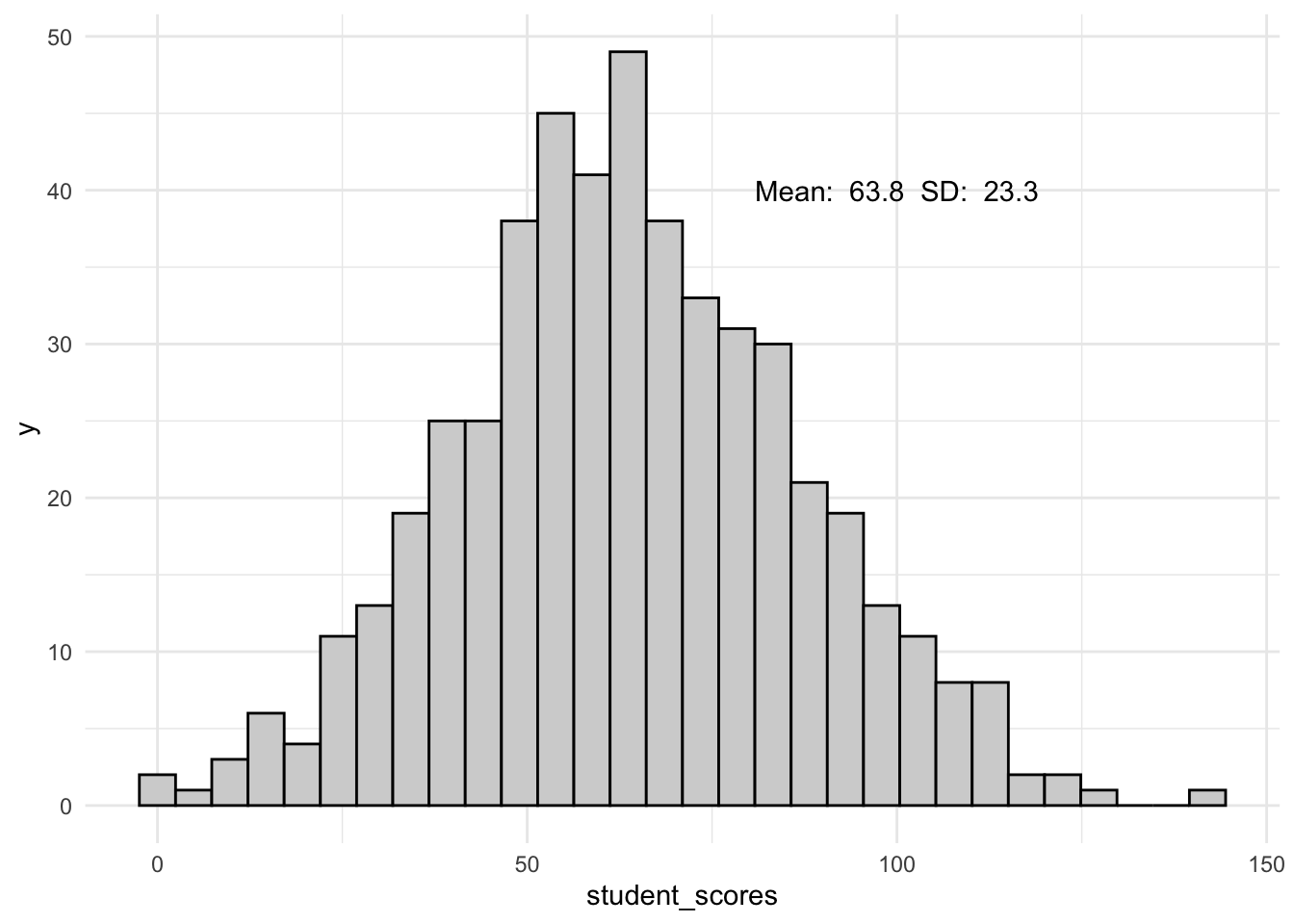

set.seed(123)

student_scores <- rnorm(n = 500, mean = 63, sd = 24) %>% round() # scores

student_id <- sample(x = 100000:999999, size = 500) #student ids

tjd_hs_data <- tibble(student_id, student_scores)

ggplot(tjd_hs_data, aes(x = student_scores)) +

geom_histogram(col = "black", fill = "lightgray") +

theme_minimal() +

annotate("text",

x = 100, y = 40,

label = paste("Mean: ",

mean(tjd_hs_data$student_scores) %>% round(1),

" SD: ",

sd(tjd_hs_data$student_scores) %>% round(1)

)

) `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

I’m getting a little bit ahead of myself, but when dealing with populations we often turn to the \(Z\) distribution to assess significance. In this simple example we might convert every student’s score to a \(Z\)-score to assess their quantile rank.

Let’s add some scores to our existing tjd_hs_data:

tjd_hs_data <-

tjd_hs_data %>%

mutate(z_scores = scale(student_scores)[,1])

# have to add the [,1] to avoid annoying naming quirkAssuming the standard distribution, the probability of students falling between a \(Z\) score of 0 and 0.5 is:

pnorm(0.5) - pnorm (0)[1] 0.1914625Given that we have 500 students, that means about 100 students should be in this range. Let’s check:

tjd_hs_data %>%

filter(z_scores >= 0 & z_scores <= 0.5) %>%

nrow()[1] 103Looks like we get 103. All seems well.

19.3 What if the data is not normal?

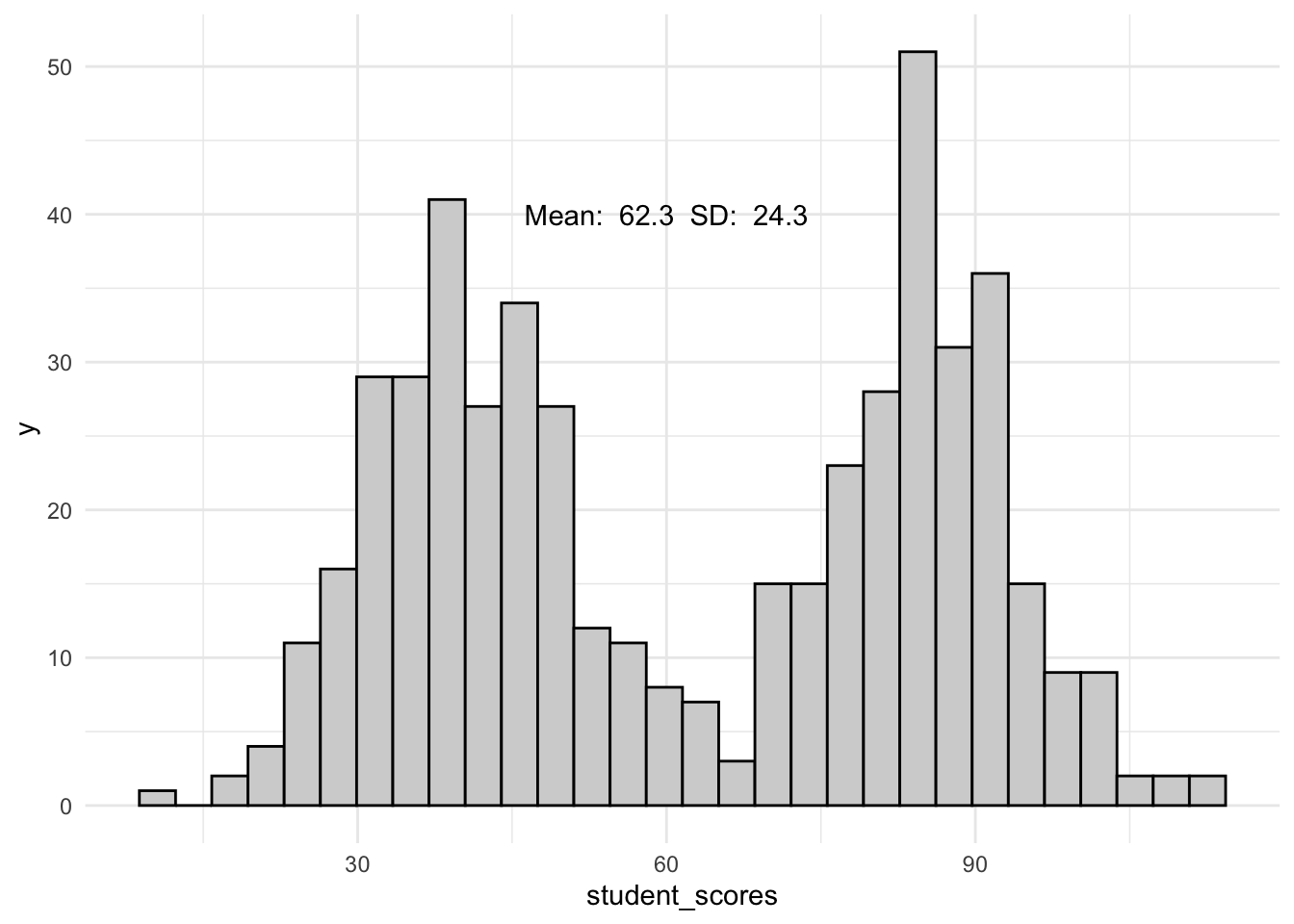

TJD-HS has a fierce crosstown rivalaly with Binary High (which is an awesome name for a band). Let’s look at their scores.

# Generating two normal distributions

# One centered at 40 with sd=10, and another centered at 85 with sd=10

set.seed(12)

group1 <- rnorm(250, mean=40, sd=10) %>% round()

group2 <- rnorm(250, mean=85, sd=10) %>% round()

# Combine the two groups to create a bimodal distribution

student_scores <- c(group1, group2)

student_id <- sample(x = 100000:999999, size = 500) #student ids

bin_hs_data <- tibble(student_id, student_scores)

ggplot(bin_hs_data, aes(x = student_scores)) +

geom_histogram(col = "black", fill = "lightgray") +

theme_minimal() +

annotate("text",

x = 60, y = 40,

label = paste("Mean: ",

mean(bin_hs_data$student_scores) %>% round(1),

" SD: ",

sd(bin_hs_data$student_scores) %>% round(1)

)

) `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

Now let’s add the \(Z\) scores:

bin_hs_data <-

bin_hs_data %>%

mutate(z_scores = scale(student_scores)[,1])

# have to add the [,1] to avoid annoying naming quirkAs before, according to the standard distribution, we should find about 100 students between \(Z\) = 0 and \(Z\) = 0.5. However, number of students from Binary High in this range is:

bin_hs_data %>%

filter(z_scores >= 0 & z_scores <= 0.5) %>%

nrow()[1] 32Only 32! What does this mean? We’ll any statistic / test that involves interpreting \(Z\) scores (or their close derivatives) is going to lead us in the WRONG DIRECTION!

19.4 Assessing normality

So this is why it’s important… on to how to check for normality. Admittedly, I’m going to give you the round-about, more detailed ways of assessing normality, before in a later walthrough discussing the methods that I use (and I really think you should use too). For now, follow along for examples, but PLEASE use the shortcut in your own analysis (spoiler alert: the performace package is your friend).

19.4.1 Visual assessment tools

I can’t say it enough, one of the first things to do is plot your data. On of the first plots you should generate is a plot of the distribution of your variables as they fit within your design (more on this in Part 2). You friends here are the histogram plot and the QQ-plot.

19.4.1.1 Histogram plot

A histogram plot is simply a plot of the distribution of score. Typically this takes the form of the number, or raw count) of scores within a number of preset bin (sizes), but can also take the form of the density, or proportion of scores. You’ll need to create a density plot to overlay with a normal curve (a least using ggplot). I’ll revisit the basics here, but the last 2 plot examples here are most useful to you.

19.4.1.1.1 What to look for

The degree to which your data are (1) asymmetrical around the mean and median and (2) fits with a theoretical normal curve with the same mean and sd parameters.

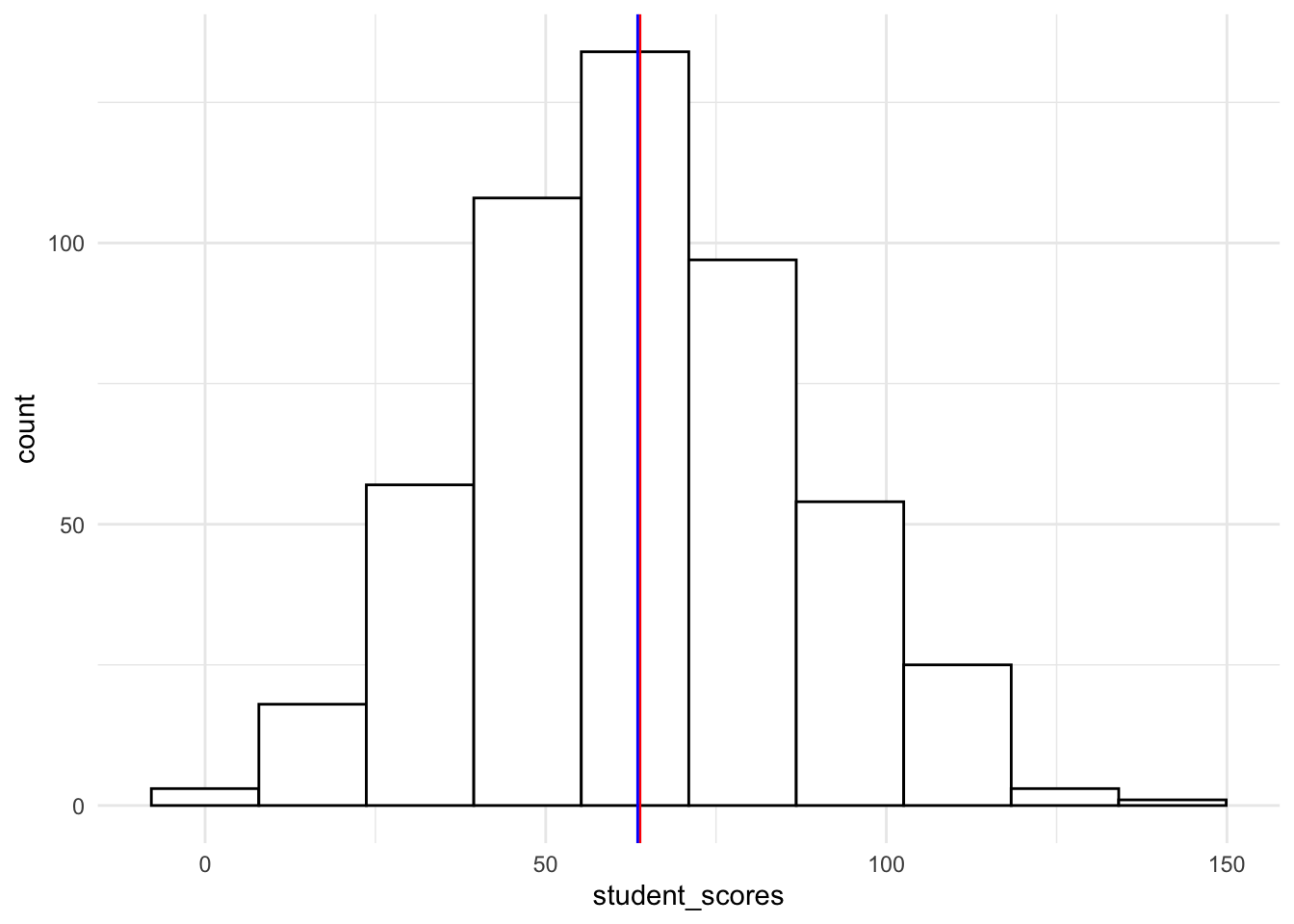

19.4.1.1.2 Basic histogram (raw counts)

Using ggplot we’ll plot a histogram of the counts of scores, placing vertical lines at the mean and median scores:

ggplot(tjd_hs_data, aes(x = student_scores)) +

geom_histogram(bins = 10,

fill = "white",

color = "black") +

geom_vline(xintercept = mean(tjd_hs_data$student_scores), color="red") +

geom_vline(xintercept = median(tjd_hs_data$student_scores), color="blue") +

theme_minimal()

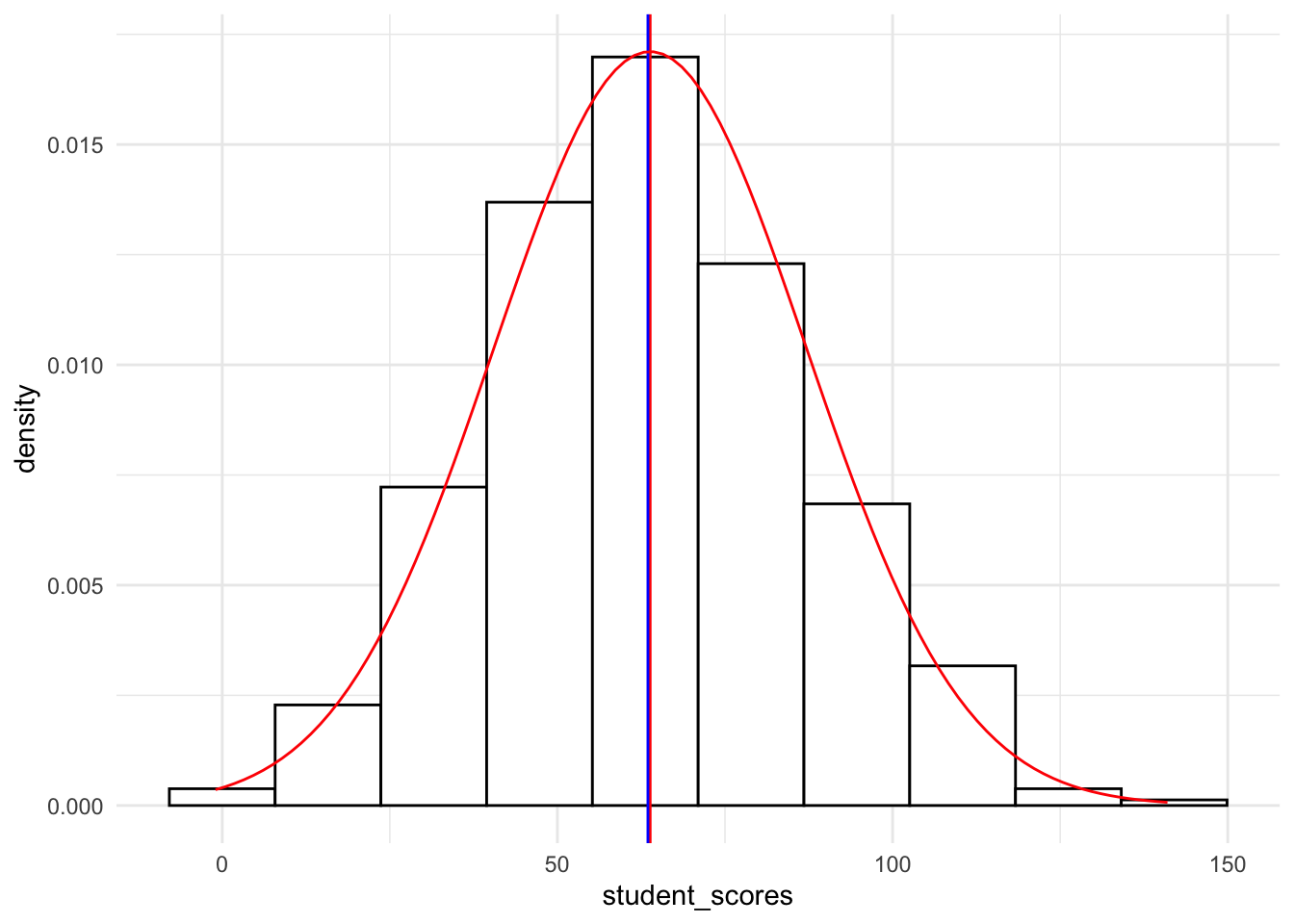

A good rule of thumb is to overlay the plot with a normal curve (theoretical distribution). The easiest way to do this is to transform your count histogram into a proportion, or density, histogram first:

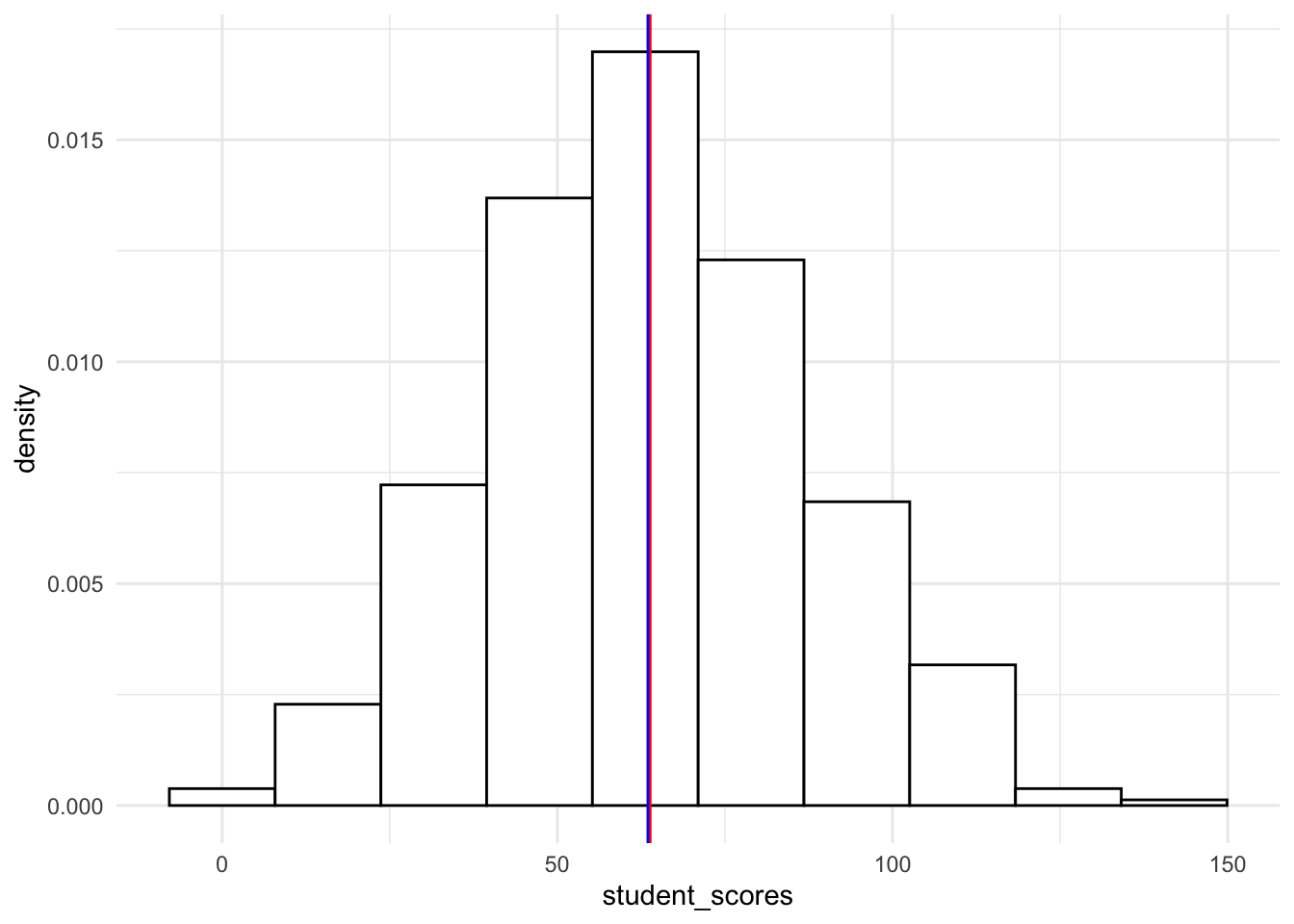

19.4.1.1.3 Density histogram (proportions)

To get a histogram with proportions instead of raw values, you simply add aes(y=..density..) to geom_histogram()

ggplot(tjd_hs_data, aes(x = student_scores)) +

geom_histogram(bins = 10,

fill = "white",

color = "black",

aes(y=..density..) # turns raw scores to proportions

) +

geom_vline(xintercept = mean(tjd_hs_data$student_scores), color="red") +

geom_vline(xintercept = median(tjd_hs_data$student_scores), color="blue") +

theme_minimal()Warning: The dot-dot notation (`..density..`) was deprecated in ggplot2 3.4.0.

ℹ Please use `after_stat(density)` instead.

19.4.1.1.4 Histogram with normal curve overlay

To overlay a normal curve we need to add stat_function to our ggplot. Note that the arguments are essentially running a dnorm with a unit mean and sd from our scores. If we are simply adding the normal curve to a density plot we add the following:

# create a density plot

ggplot(tjd_hs_data, aes(x = student_scores)) +

geom_histogram(bins = 10,

fill = "white",

color = "black",

aes(y=..density..) # turns raw scores to proportions

) +

geom_vline(xintercept = mean(tjd_hs_data$student_scores), color="red") +

geom_vline(xintercept = median(tjd_hs_data$student_scores), color="blue") +

stat_function(fun = dnorm, # generate theoretical norm data

color = "red", # color the line red

args=list(

mean = mean(tjd_hs_data$student_scores,na.rm = T), # build around mean

sd = sd(tjd_hs_data$student_scores,na.rm = T) # st dev parameter

)

) +

theme_minimal()

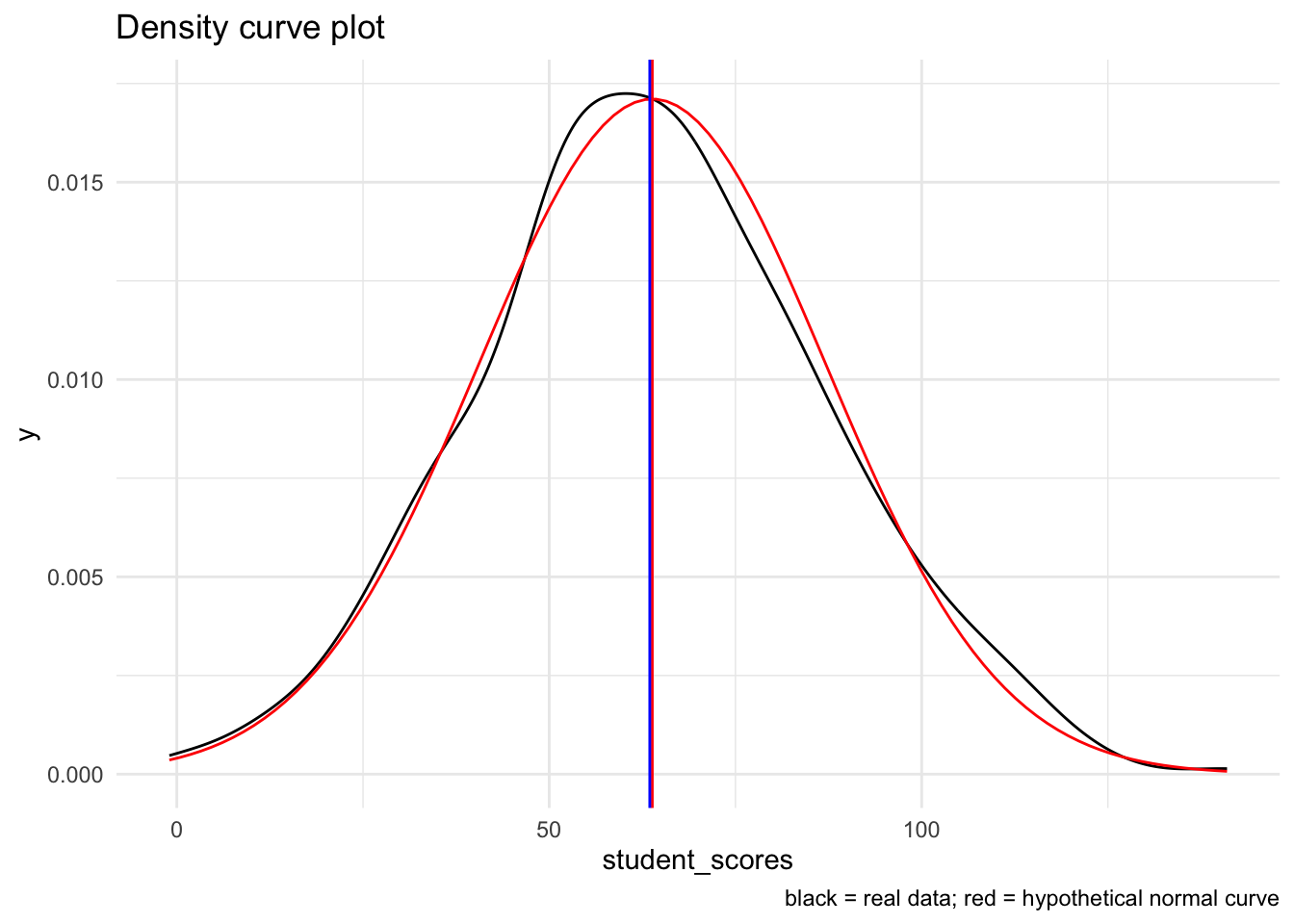

19.4.1.1.5 Density curve with with normal curve overlay

Note that it may actually be simpler to simply create a density curve of the data and overlay it with a normal curve. The resulting plot is a smooth-function interpretation of the distribution of the data:

# create a raw plot

ggplot(tjd_hs_data, aes(x = student_scores)) +

geom_density() +

stat_function(fun = dnorm, # generate theoretical norm data

color = "red", # color the line red

args=list(

mean = mean(tjd_hs_data$student_scores,na.rm = T), # build around mean

sd = sd(tjd_hs_data$student_scores,na.rm = T) # st dev parameter

)

) +

geom_vline(xintercept = mean(tjd_hs_data$student_scores), color="red") +

geom_vline(xintercept = median(tjd_hs_data$student_scores), color="blue") +

labs(

title = "Density curve plot",

caption = "black = real data; red = hypothetical normal curve"

) +

theme_minimal()

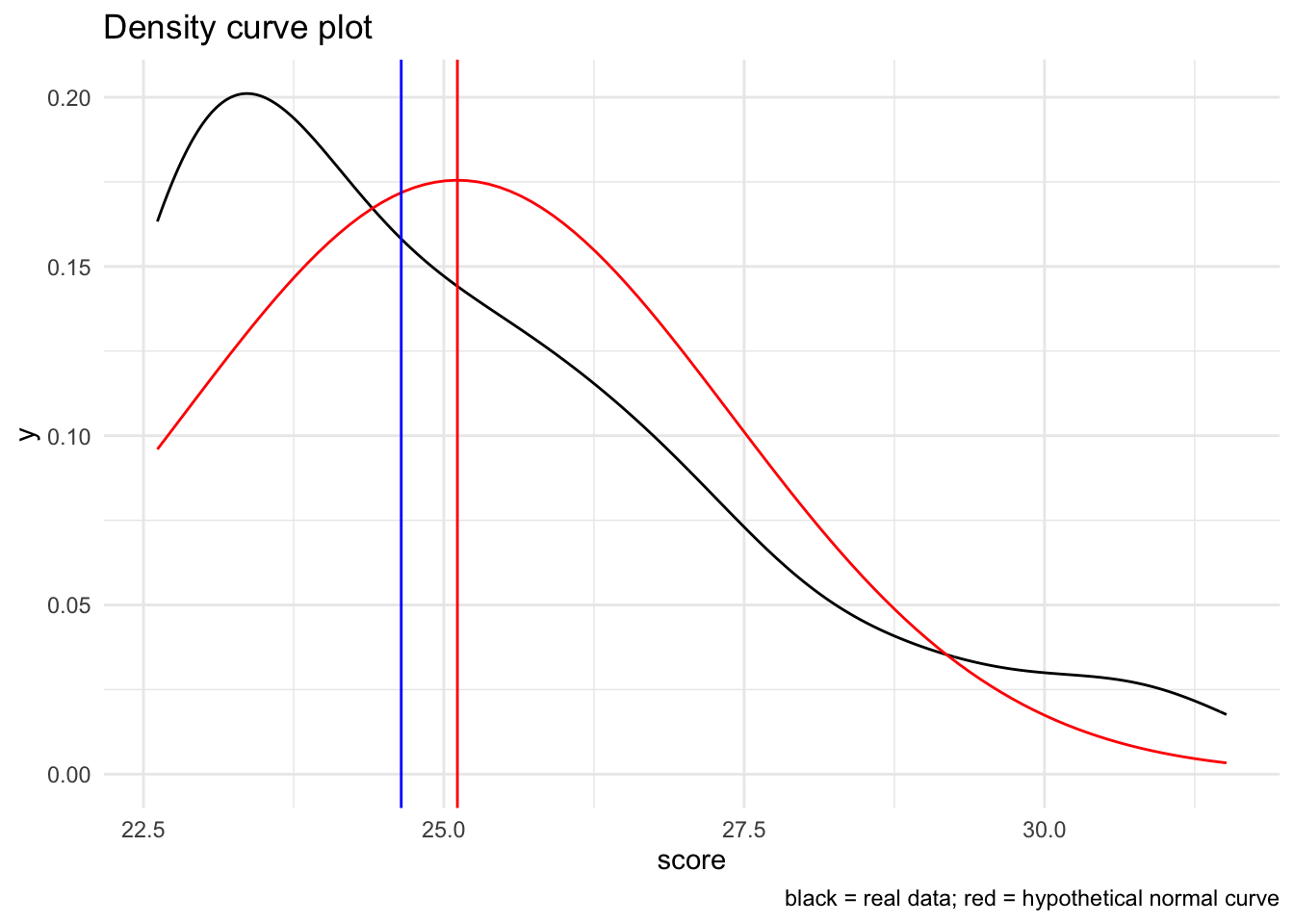

19.4.1.1.6 Non-normal example

I’m going to recreate the density histogram using the skewed_sample to give you a feel for what this looks like.

Recall that we generated the skewed sample using:

skewed_sample <-

tibble("observation" = 1:100,

"score" = SimDesign::rValeMaurelli(100, mean=25, sigma=5, skew=1.5,

kurt=3) %>% as.vector()

)Visually:

# create a raw plot

ggplot(skewed_sample, aes(x = score)) +

geom_density() +

stat_function(fun = dnorm, # generate theoretical norm data

color = "red", # color the line red

args=list(

mean = mean(skewed_sample$score,na.rm = T), # build around mean

sd = sd(skewed_sample$score,na.rm = T) # st dev parameter

)

) +

geom_vline(xintercept = mean(skewed_sample$score), color="red") +

geom_vline(xintercept = median(skewed_sample$score), color="blue") +

labs(

title = "Density curve plot",

caption = "black = real data; red = hypothetical normal curve"

) +

theme_minimal()

Again, notice how the data are both shifted and do not cleanly fit with the normal curve.

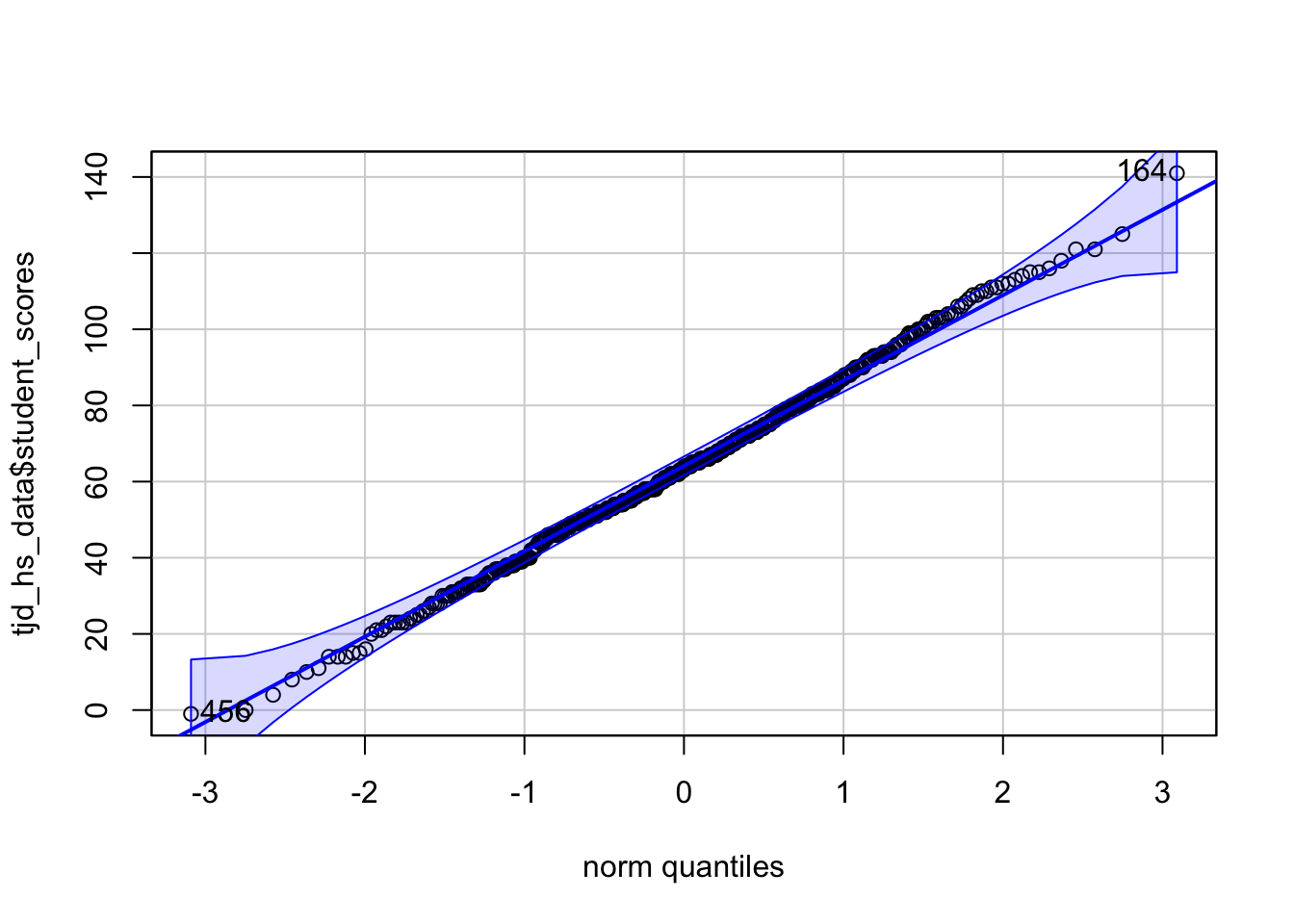

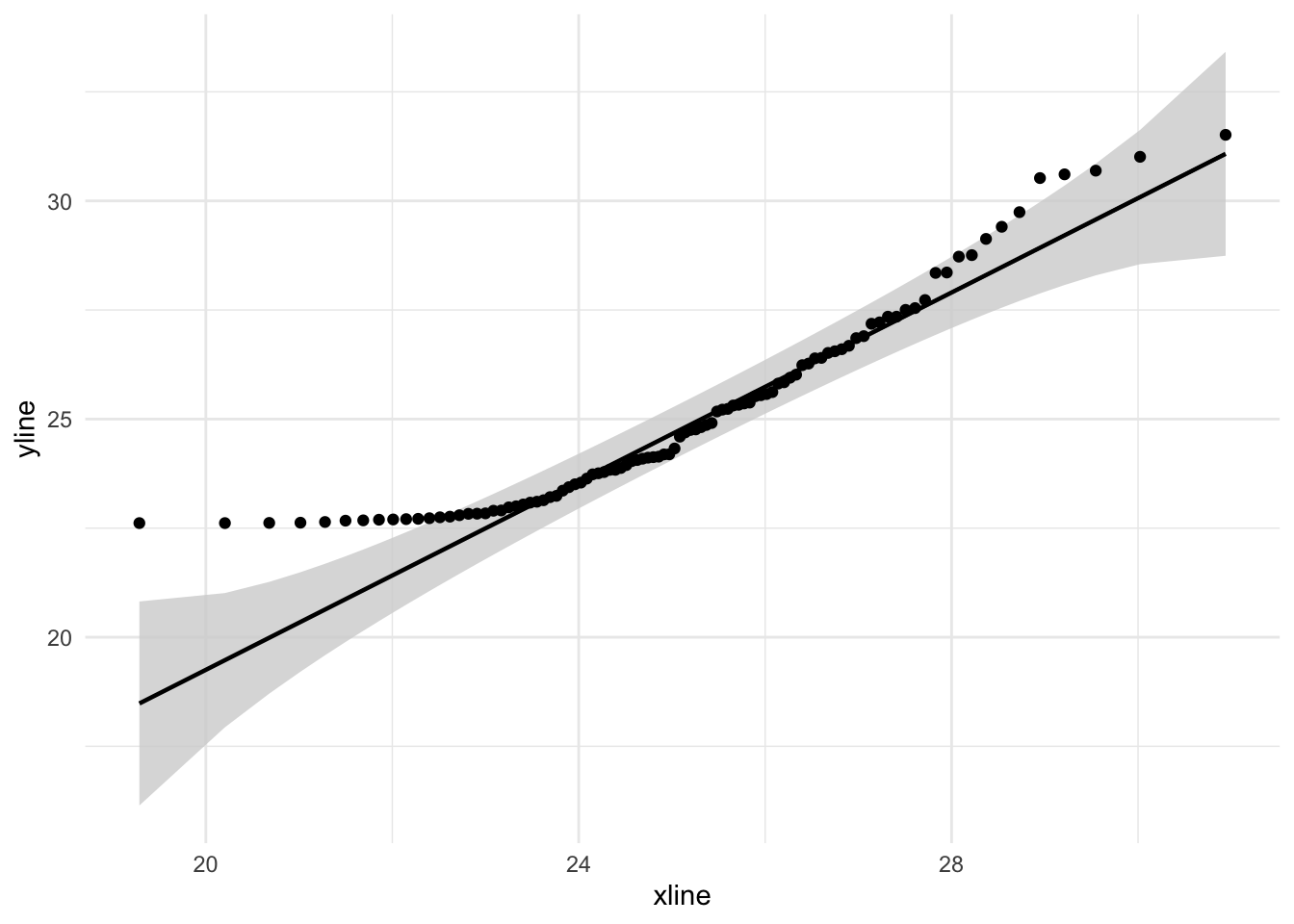

19.4.1.2 Quantile-quantile plots

Normality can also be visually assessed using a quantile-quantile plot. A quantile-quantile plot compares raw scores with theoretical scores that assume a normal distribution. If both are roughly equal then the result should be that the points fall along a line of equality (qq-line). In a quantile-quantile plot deviations from the line indicate deviations from normality.

19.4.1.2.1 What to look for

A large number of significant deviations (outside the bands) is a hint that the data is non-normal. This link on CrossValidated is pretty good in terms of interpreting Q-Q plots. Note that for more stringent criteria one can take a look at the correlation between the raw sample data and the theoretical normed data.

19.4.1.2.2 Basic qqplot

There are several ways to put together a QQ-plot; my preferred are (1) using the car::qqPlot function when just taking a quick look; (2) using ggplot when I need something “publication worthy” or when I’m conducting more complex analysis. A third method that I’ll used here regclass::qq is useful as a learning example.

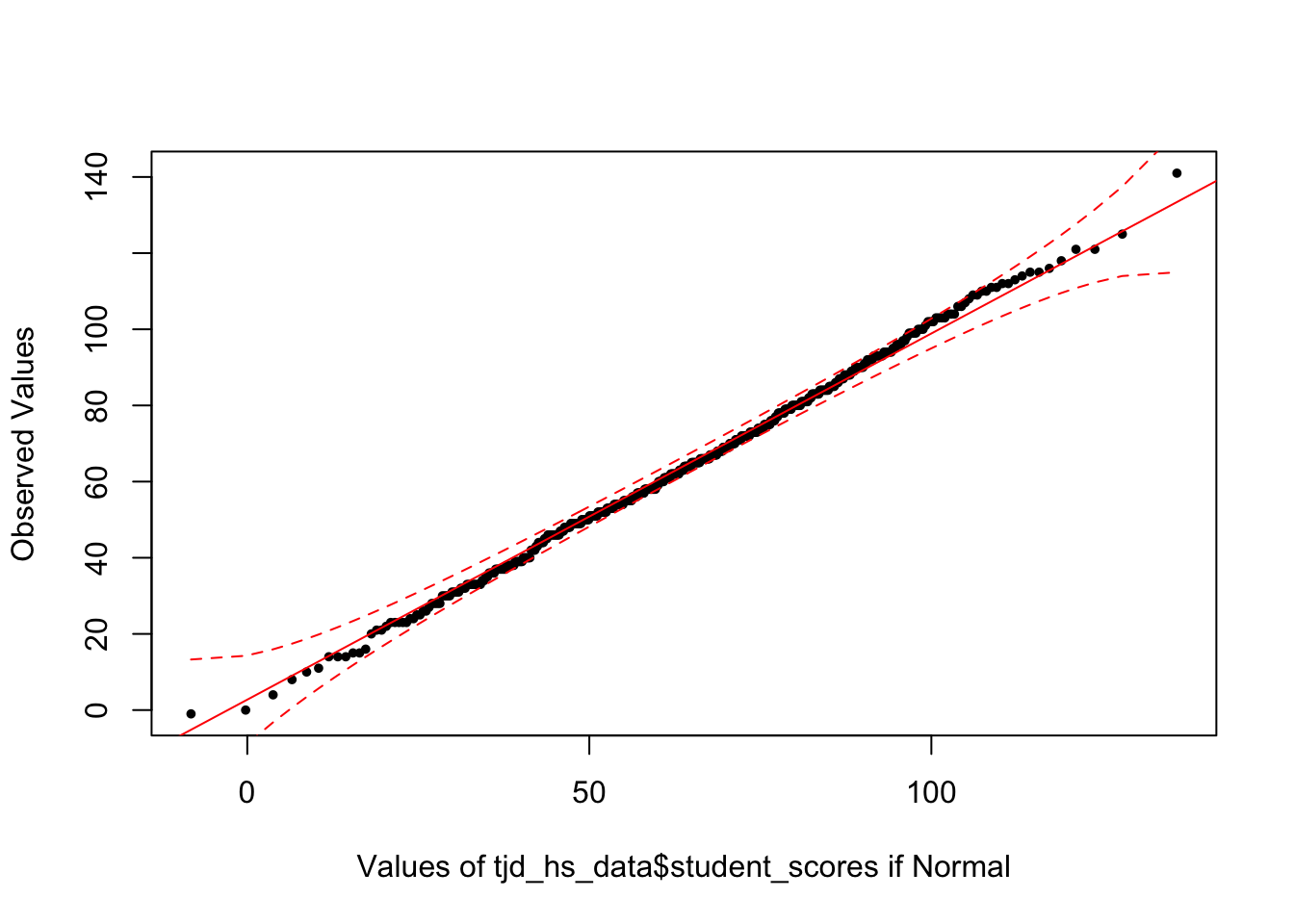

First, lets take a look at tjd_hs_data$score using car::qqPlot:

library(car)

car::qqPlot(tjd_hs_data$student_scores, distribution="norm")

[1] 164 456This produces a QQ-plot with a confidence envelope based on the SEs of the order statistics of an independent random sample from the comparison distribution (see Fox 2016, Applied Regression Analysis and Generalized Linear Models, Chapter 12). The highlighted observations, in this case 12 and 92 are the 2 scores with the largest model residuals—in this case deviation from the mean, but we can through linear model outputs into this function (see Week 6 of class: Modeling with continuous predictors / simple regression)

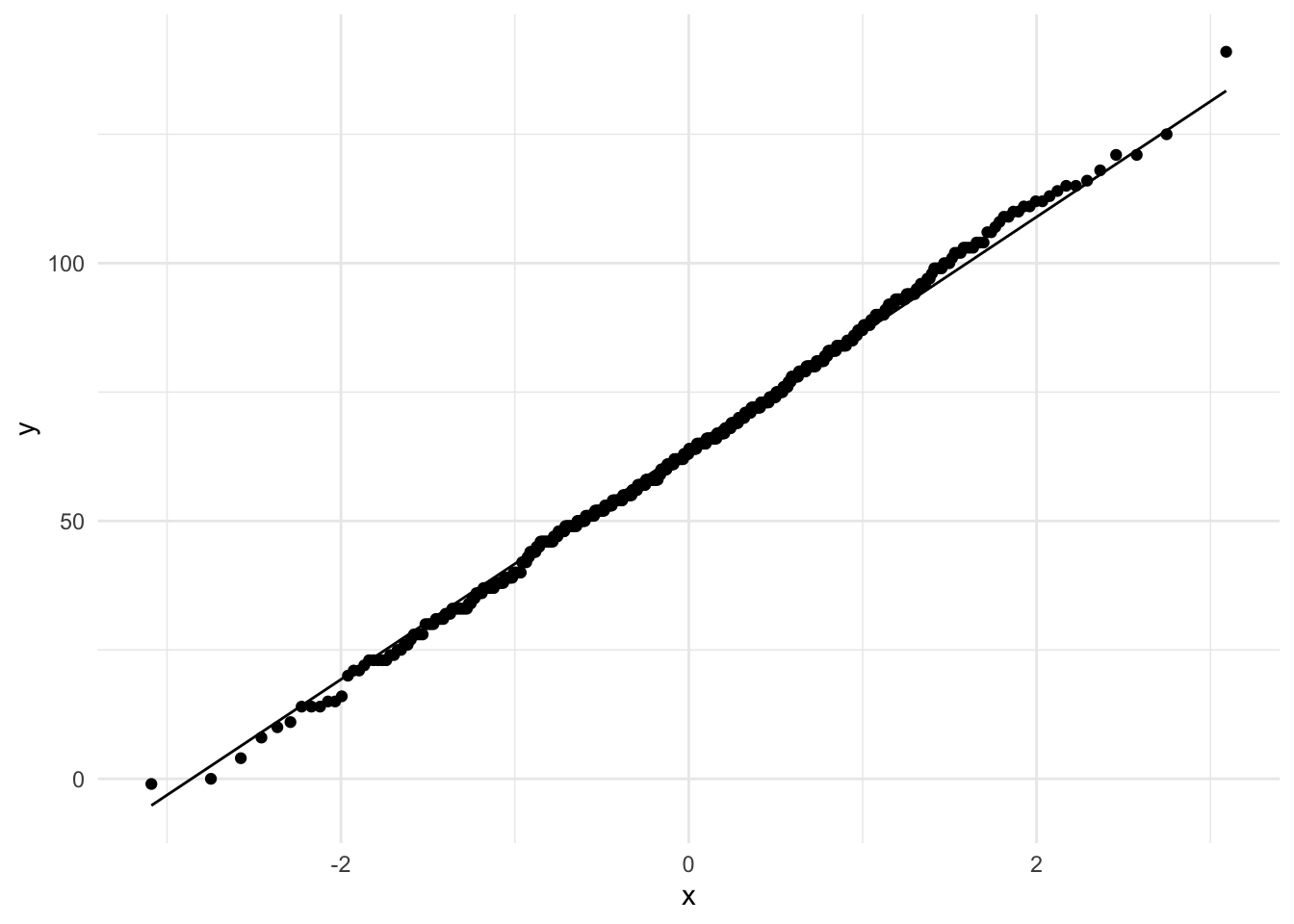

The x-values are the normed quantiles, while the y-values are our actual raw scores. The normed quantiles values are essentially normed stand-ins for “what the raw values should be if the data was normal. To demonstrate this let’s use the regclass:qq function:

library(regclass)

regclass::qq(tjd_hs_data$student_scores)

As you can see this is the exact same plot. We typically focus on the quantile values as they allow us to better compare data across different distributions.

Taking a look both our tjd_hs_data and skewed_sample.

Finally we can use ggplot to construct better looking plots.

ggplot(tjd_hs_data, aes(sample=student_scores)) +

geom_qq() + geom_qq_line() +

theme_minimal()

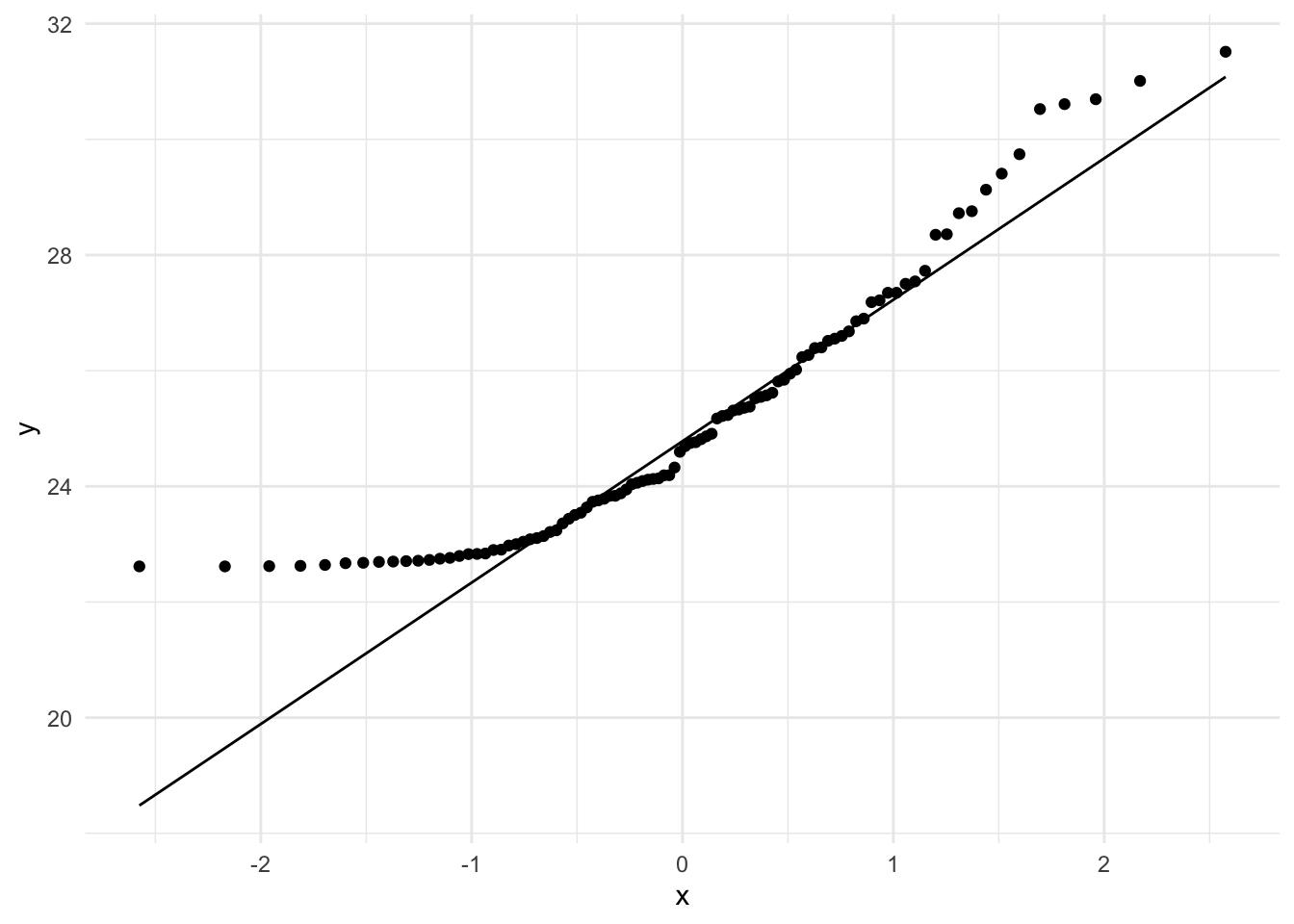

ggplot(skewed_sample, aes(sample=score)) +

geom_qq() + geom_qq_line() +

theme_minimal()

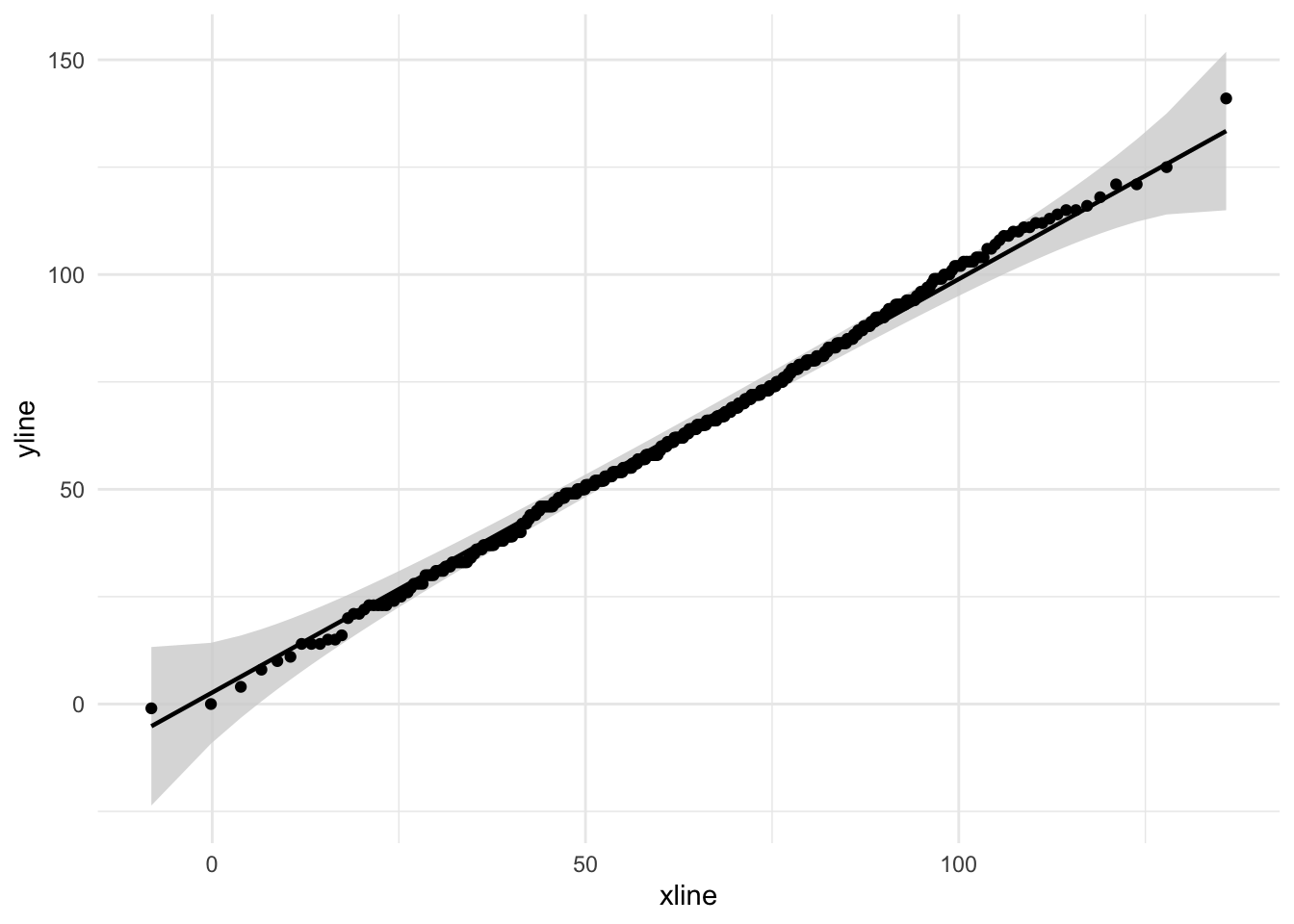

19.4.1.2.3 Advanced qqplot with 95% CI bands

We can also create bands around these plots using the qqplotr library in conjuction with ggplot:

pacman::p_load(qqplotr)

ggplot(tjd_hs_data, aes(sample=student_scores)) +

stat_qq_band(fill="lightgray") + stat_qq_point() + stat_qq_line() +

theme_minimal()

ggplot(skewed_sample, aes(sample=score)) +

stat_qq_band(fill="lightgray") + stat_qq_point() + stat_qq_line() +

theme_minimal()

19.4.2 Calculated measures of skew and kurtosis

Measures of skew and kurtosis may be obtained using the psych::describe() function. There is some debate concerning the interpretation of these values. First let’s obtain skew and kurtosis using the psych::describe() function. From here we’ll walk-though the specifics of what these values are and how to interpret them (and limitations). I’ll finally offer a recommendation I’ll point you in the direction of several resources in this debate as well as summarize key issues as I see them.

First tjd_hs_data:

pacman::p_load(psych)

psych::describe(tjd_hs_data$student_scores, type=2) vars n mean sd median trimmed mad min max range skew kurtosis se

X1 1 500 63.84 23.32 63.5 63.61 22.98 -1 141 142 0.09 -0.03 1.04Alternatively, you can call skew() and kurtosi() seperately:

tjd_hs_data$student_scores %>% skew()[1] 0.08845382tjd_hs_data$student_scores %>% kurtosi()[1] -0.05599991Now skewed_sample:

psych::describe(skewed_sample$score, type = 2) vars n mean sd median trimmed mad min max range skew kurtosis se

X1 1 100 25.11 2.27 24.65 24.81 2.39 22.62 31.51 8.9 0.97 0.26 0.2319.4.2.1 using the non-transformed values

The values of skew and kurtosis are calculated using the three types outlined by Joanes and Gill (1998) here. By default, for psych::describe(), type=3, which corresponds to \(b_1\) and \(b_2\) from Joanes and Gill. Note that type=2 corresponds to the values obtained using SPSS. For the purposes of comparison we will use type=2, though note that each (type=2 or type=3) has its advantages, see Joanes and Gill, 1998 for more detail. As relayed by Kim (2013) here, if one wants to assess skew and kurtosis based upon these raw values, then any value > 2 for skew or > 4 for kurtosis should be viewed as suspect.

19.4.2.2 using the standardized values

However, as Kim notes, one can apply a test of standardized test for normality by dividing the skew and kurtosis by their respective standard errors. Note that SPSS will provide you with this standard error estimate. R by default does not. You may elect to replicate the SPSS method, again based on type=2 calculations, by importing the function found below (taken from Howard Seltman, here). Once you have imported the function spssSkewKurtosis you can simply call spssSkewKurtosis(vector). For example, with skewed_sample:

# Skewness and kurtosis and their standard errors as implemented by SPSS

#

# Reference: pp 451-452 of

# http://support.spss.com/ProductsExt/SPSS/Documentation/Manuals/16.0/SPSS 16.0 Algorithms.pdf

#

# See also: Suggestion for Using Powerful and Informative Tests of Normality,

# Ralph B. D'Agostino, Albert Belanger, Ralph B. D'Agostino, Jr.,

# The American Statistician, Vol. 44, No. 4 (Nov., 1990), pp. 316-321

spssSkewKurtosis <- function(x) {

w=length(x)

m1=mean(x)

m2=sum((x-m1)^2)

m3=sum((x-m1)^3)

m4=sum((x-m1)^4)

s1=sd(x)

skew=w*m3/(w-1)/(w-2)/s1^3

sdskew=sqrt( 6*w*(w-1) / ((w-2)*(w+1)*(w+3)) )

kurtosis=(w*(w+1)*m4 - 3*m2^2*(w-1)) / ((w-1)*(w-2)*(w-3)*s1^4)

sdkurtosis=sqrt( 4*(w^2-1) * sdskew^2 / ((w-3)*(w+5)) )

mat=matrix(c(skew,kurtosis, sdskew,sdkurtosis), 2,

dimnames=list(c("skew","kurtosis"), c("estimate","se")))

return(mat)

}

# skewed_sample

spssSkewKurtosis(skewed_sample$score) estimate se

skew 0.9736209 0.2413798

kurtosis 0.2625518 0.4783311Also note that you can source this custom code to your environment whenever you want using:

# I typically source files in the first chunk as well

source("http://www.stat.cmu.edu/~hseltman/files/spssSkewKurtosis.R")From here we could divide each estimate by their se and apply the critical values as recommended by Kim:

spssSkewKurtosis(skewed_sample$score)[,1]/

spssSkewKurtosis(skewed_sample$score)[,2] skew kurtosis

4.0335643 0.5488912 According to Kim:

- if \(N\) < 50, any value over 1.96

- if (50 < \(N\) < 300), any value over 3.29

- if \(N\) > 300, no transform: any value of 2 for skew and 4 for kurtosis.

Both skew and kurtosis fail the normal test. Compare this to tjd_hs_data:

spssSkewKurtosis(tjd_hs_data$score)[,1]/

spssSkewKurtosis(tjd_hs_data$score)[,2]Warning: Unknown or uninitialised column: `score`.Warning in mean.default(x): argument is not numeric or logical: returning NAWarning: Unknown or uninitialised column: `score`.Warning in mean.default(x): argument is not numeric or logical: returning NA skew kurtosis

NA NA Both absolute values are below 3.29.

19.4.2.3 using a bootstrapped standard errors (recommended)

There is a caveat to the test method from the previous section. The standard errors calculated above work for normal distributions, but not for other distributions. Obviously this is a problem, as by definition we are using the above method, including the standard errors, to test for non-normality. If our distribution is non-normal, then the standard error that we are using to test for non-normality is suspect!! See here for a brief discussion. And a solution, DescTools!!

The solution involves bootstrapping the 95% confidence interval and estimating the standard error. DescTools::Skew() and DescTools::Kurt() both provide this option, using ci.type="bca". This method is also recommended by Joanes and Gill. Note that here method refers to the type of skew and kurtosis calculation (again, keeping it 2 for the sake of consistency, although 3 is fine as well).

pacman::p_load(DescTools)

# bootstrap with 1000 replications (R=1000)

DescTools::Skew(x = skewed_sample$score, method = 2, ci.type = "bca", conf.level = 0.95, R = 1000) skew lwr.ci upr.ci

0.9736209 0.6342950 1.3622641 from here, the standard error may be estimated by taking the range of the bootstrapped confidence interval and dividing it by 3.92

bca_skew <- DescTools::Skew(x = skewed_sample$score, method = 2, ci.type = "bca", conf.level = 0.95, R = 1000)

ses <- (bca_skew[3]-bca_skew[2])/3.92

ses %>% unname() # unname simply removes the name[1] 0.1783177From here we can take the obtained skew and divide it by the standard error, ses.

DescTools::Skew(x = skewed_sample$score, ci.type = "bca", conf.level = 0.95, R = 1000)[1] / ses skew

5.297325 Similar steps can be taken for kurtosis:

bca_kurtosis <- DescTools::Kurt(x = skewed_sample$score, ci.type = "bca", conf.level = 0.95, R = 1000)

ses <- (bca_kurtosis[3]-bca_kurtosis[2])/3.92

DescTools::Kurt(x = skewed_sample$score, ci.type = "bca", conf.level = 0.95, R = 1000)[1]/ses kurt

0.2445964 The logic of this. Consider that we have bootstrapped a distribution of skews and or kurtosi just as we have done in the past with means. From class we know that the standard error equals the standard deviation of this distribution. The 95% CI obtained from bootstrapping are equivalent to ±1.96 * the standard deviation. Therefore we can obtain the standard error by dividing the width of the 95% CI by 2 * 1.96 or 3.92.

Admittedly, coding this by hand is a bit of a chore, but the beauty of programming is that we can create our own functions for processes that we routinely perform.

In this case I want to input a vector like skewed_sample$score and have it return the bootstrapped ses and the resulting skew and kurtosis.

I’ll name this function skew_kurtosis_ses. Notice how I’m just taking the steps that I’ve performed before and wrapping them in the function:

# Skewness and kurtosis and their standard errors as determined

# using boostrapped confidence intervals

# Arguments:

# - x: vector of data you wish to analyze

# - calc_method: method of calculating skew and kurtosis, used by DescTools, defaults to "2"

# - calc_ci: how to derive conf. intervals, for DescTools, default "bca"

# - reps: How many bootstrap repetitions, recommend at least 1000

# Result: a 2x2 matrix with standardized skew and kurtosis (z)

# as well as critical values to compare against.

# Values as recommended by Kim (2013) Statistical notes for clinical

# researchers: assessing normal distribution (2) using skewness and kurtosis.

skew_kurtosis_z <- function(x, calc_method = 2, calc_ci = "bca", reps = 10000){

# get skew and kurt and their cis

skew_ci <- DescTools::Skew(x, method = calc_method, ci.type = calc_ci,

conf.level = 0.95, R = reps)

kurt_ci <- DescTools::Kurt(x, method = calc_method, ci.type = calc_ci,

conf.level = 0.95, R = reps)

# calculate ses from ci

skew_ses <- (skew_ci[3] - skew_ci[2])/3.92

kurt_ses <- (kurt_ci[3] - kurt_ci[2])/3.92

# calculate standardized valuess

skew_z<- skew_ci/skew_ses

kurt_z<- kurt_ci/kurt_ses

# remove names and ci columns

skew_z <- unname(skew_z)[1]

kurt_z <- unname(kurt_z)[1]

values <- data.frame("values" = rbind(skew_z,kurt_z))

# what are the critical values?

N <- length(x)

crit_vals<- ifelse(N<50, 1.96,

ifelse(N<300, 3.29)

)

round(cbind(values,crit_vals),digits = 2) # round to 2 digits

}Now to test my function:

skew_kurtosis_z(x = skewed_sample$score, calc_method = 2, calc_ci = "bca", reps = 1000) values crit_vals

skew_z 5.33 3.29

kurt_z 0.47 3.29For those interested this function can be found on my github.com page, here, and sourced like so:

source("http://tehrandav.is/courses/statistics/custom_functions/skew_kurtosis_z.R")You can also save it to your own computer and source it from there as needed.

Keep in mind since we are bootstrapping from random selection your absolute magnitudes will vary.

19.4.3 Significance Tests

19.4.3.1 Shapiro-Wilks

The formal normality tests including Shapiro-Wilks test may be used from small to medium sized samples (e.g., n < 300), but may be unreliable for large samples. It outputs a test statistic W that is tested against deviations from 1. This can be run by:

shapiro.test(tjd_hs_data$student_scores)

Shapiro-Wilk normality test

data: tjd_hs_data$student_scores

W = 0.99801, p-value = 0.8315FWIW, you’ll notice from your readings that Field recommends AGAINST using this method. I’m inclinded to concur. If you want to use a quantification test, I would recommend the Bootstrapped Standard Errors method from above.

19.4.3.2 Kolmogorov-Smirnov and other tests

Indeed there are other tests for normality. I don’t really recommend any of them though, so let’s move on to our next assumption.